FPF Year in Review 2025

[…] and Part 4 (Responsible Design and Risk Management). Consent for Processing Personal Data in the Age of AI: Key Updates Across Asia-Pacific From India’s DPDPA to Vietnam’s new Decree and Indonesia’s PDPL, the Asia-Pacific region is undergoing a shift in its data protection law landscape. This issue brief provides an updated view of evolving […]

FPF releases issue brief on Vietnam’s Law on Protection of Personal Data and the Law on Data

[…] addressing overlapping domains of data protection, data governance, and emerging technologies. Read the Issue Brief here. This Issue Brief analyzes the two laws, which together define a new, comprehensive regime for data protection and data governance in Vietnam. The key takeaways from this joint analysis show that: The new PDP Law elevates and enhances […]

FPF Issue Brief – Making Sense of Vietnam’s Latest Data Protection and Governance Regime

[…] Table of Contents 1. Introduction 2. The new PDP Law elevates and enhances data protection in Vietnam by pre […]

Five Big Questions (and Zero Predictions) for the U.S. Privacy and AI Landscape in 2026

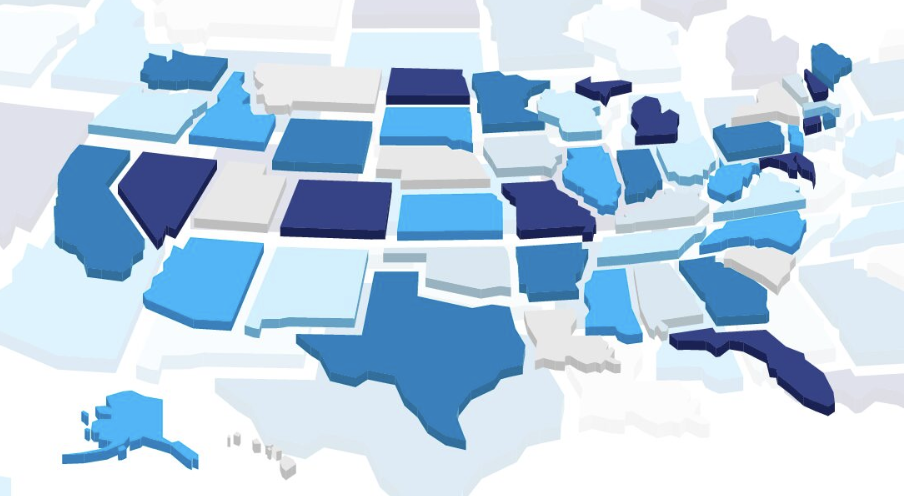

[…] year was more due to chance than anything else, and next year will return to business-as-usual. After all, Alabama, Arkansas, Georgia, Massachusetts, Oklahoma, Pennsylvania, Vermont, and West Virginia all had bills make it to a floor vote or progress into cross-chamber, and some of those bills have been carried over into the 2026 legislative […]

Youth Privacy in Australia: Insights from National Policy Dialogues

Throughout the fall of 2024, the Future of Privacy Forum (FPF), in partnership with the Australian Academic and Research Network (AARNet) and Australian Strategic Policy Institute (ASPI), convened a series of three expert panel discussions across Australia exploring the intersection of privacy, security, and online safety for young people. This event series built on the […]

FPF Youth Privacy in Australia

[…] by lessons learned from regulating prior technologies—especially social media. Several experts stressed that generative AI, while novel in form, often amplifies well-known harms rather than creating entirely new ones. One framework presented at the events asked: Is the harm new? A magnified prior harm? Or a misunderstood repeat? This model encourages policymakers to distinguish […]

2025 FPF Privacy Communities

[…] For those without a Member Portal account, click here to request Portal access and FPF will send an onboarding email with details on how to access your new account. If you have any questions or issues accessing your Portal profile, please email [email protected] . Ad Practices – Monthly call on the first Thursday of […]

FPF Releases Updated Report on the State Comprehensive Privacy Law Landscape

The state privacy landscape continues to evolve year-to-year. Although no new comprehensive privacy laws were enacted in 2025, nine states amended their existing laws and regulators increased enforcement activity, providing further clarity (and new questions) about the meaning of the law. Today FPF is releasing its second annual report on the state comprehensive privacy […]