FPF and Washington & Lee University Law School Announce Partnership

DC-BASED PRIVACY THINK TANK FUTURE OF PRIVACY FORUM PARTNERS WITH WASHINGTON AND LEE UNIVERSITY SCHOOL OF LAW TO CREATE UNIQUE ACADEMIC-PROFESSIONAL PARTNERSHIP

Affiliation to Advance Privacy Scholarship, Create Business/Academic Ties, and Incubate Tomorrow’s Privacy Lawyers

WASHINGTON, D.C. & LEXINGTON, Va. – Thursday, October 29, 2015 – The Future of Privacy Forum (FPF) and Washington and Lee University School of Law today announced a unique strategic partnership designed to enrich the legal academic experience and to enhance scholarship and conversations about privacy law and policy.

The FPF/W&L Law collaboration will:

- Include new curricula for W&L Law students

- Create internships for students with both FPF and its Advisory Board companies

- Involve W&L Law Faculty in FPF Conferences and Research Initiatives

- Provide a Washington, D.C. home in FPF’s new offices for classes associated with the W&L third-year D.C. program

“This partnership is such a great opportunity to combine the resources and talent of a top-tier law school with the mission and objectives of a privacy-focused think tank,” said Christopher Wolf, co-chair of FPF. “FPF policy staff and fellows and W&L Law students and faculty already are working together on issues such as the privacy of data collected by connected cars and the ethical review processes for big data. As a 1980 graduate of W&L Law, I am so pleased to have brought together my law school with the Future of Privacy Forum, the think tank I founded in 2008.”

W&L Law Dean Brant Hellwig said, “Through this partnership, we will expand our footprint in Washington, creating even more opportunities for our students in Lexington and in the D.C. program. It also leverages our growing faculty expertise in privacy and national security law, so we can have a larger impact on policy deliberations.”

FPF Executive Director Jules Polonetsky added: “We are thrilled that as another feature of the partnership, W&L Law professors Margaret Hu and Joshua Fairfield will serve on the FPF Advisory Board. Professor Hu is well known for her research on national security, cyber-surveillance and civil rights, and her recent writing on government use of database screening and digital watch listing systems to create ‘blacklists’ of individuals based on suspicious data. Professor Fairfield is an internationally recognized law and technology scholar, specializing in digital property, electronic contract, big data privacy, and virtual communities.”

On Thursday, November 5, FPF and W&L Law are celebrating the partnership, along with the opening of FPF’s new headquarters in Washington, with a panel discussion addressing the future of Section 5 of the FTC Act. Former FTC Consumer Bureau Director David Vladeck and James Cooper, former Acting Director, FTC Office of Policy Planning, will discuss key Section 5 issues raised in the FTC’s privacy and data security actions, such as materiality, harm, and the role of cost-benefit analysis. The program will take place from 5:00 p.m. to 7:00 p.m. and will be followed by an open house reception at FPF offices, 1400 Eye Street, N.W., Suite 450, Washington, D.C. 20005.

About Future of Privacy Forum

The Future of Privacy Forum (FPF) is a Washington, D.C.-based think tank that seeks to advance responsible data practices. The forum is led by Internet privacy experts Jules Polonetsky and Christopher Wolf and includes an advisory board composed of leading figures from industry, academia, law and advocacy groups.

About Washington & Lee School of Law

Washington and Lee University School of Law in Lexington, Virginia, is one of the smallest of the nation’s top-tier law schools, with an average class size of 22 and a 9-to-1 student-faculty ratio. The Law School’s commitment to student-centered legal education, emphasis on legal writing, and dedication to professional development are reflected in the impressive achievements of its graduates, who include seven American Bar Association presidents, 22 members of the U.S. Congress, numerous state and federal judges, and Supreme Court Justice Lewis F. Powell.

Media Contacts

Nicholas Graham, for FPF: 571-291-2967, [email protected]

Peter Jetton, for W&L Law: 540-461-1326, [email protected]

Cross-Device: Understanding the State of State Management

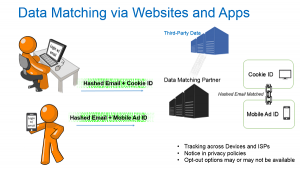

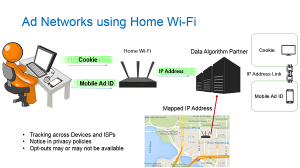

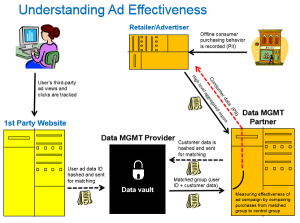

On Friday, October 16, the Future of Privacy Forum filed comments with the FTC in advance of the FTC’s Cross Device Workshop on Nov. 16, 2015. Jules Polonetsky and Stacey Gray have prepared a report, Cross-Device: Understanding the State of State Management, based on revisions to FPF’s comments filed with the FTC on October 16th, that aims to describe how and why the advertising and marketing industries are using emerging technologies to track individual users across platforms and devices.

In the first decades of the Internet, the predominant method of state management–the ability to remember a unique user over time–was the “cookie.” However, because of how cookies operate, via the web browser placing a data file onto a user’s hard drive, this model is becoming increasingly ineffective at tracking user behavior across different browsers and devices. The fact that modern users are now accessing online content and resources through a broadening spectrum of devices–e.g. laptop, smartphone, tablet, watch, wearable fitness tracker, television, and other internet-connected home appliances–is creating a real challenge for advertisers and marketers who seek to holistically analyze consumer behavior. In these comments, we explain the challenges and some of the emerging technological solutions, each of which presents nuanced differences in privacy benefits and concerns.
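The limitation described above can be sketched in a few lines of Python. This is only an illustrative toy model, not anything drawn from the report: the `Browser` and `Site` classes and the `visitor_id` cookie name are hypothetical, and a real browser and web server exchange cookies through HTTP headers rather than a shared dictionary. The point it shows is simply that a cookie lives in one browser’s local storage, so the same person on a second device looks like a new visitor.

```python
import uuid


class Browser:
    """Hypothetical browser: each one keeps its own local cookie jar."""

    def __init__(self, name):
        self.name = name
        self.cookies = {}


class Site:
    """Hypothetical site that recognizes returning visitors only by cookie."""

    def __init__(self):
        self.visits = {}  # visitor_id -> number of requests seen

    def handle_request(self, browser):
        visitor_id = browser.cookies.get("visitor_id")
        if visitor_id is None:
            # First visit from this browser: issue a new identifier
            # and store it in that browser's cookie jar.
            visitor_id = uuid.uuid4().hex
            browser.cookies["visitor_id"] = visitor_id
        self.visits[visitor_id] = self.visits.get(visitor_id, 0) + 1
        return visitor_id


# One person using two devices.
site = Site()
laptop = Browser("laptop")
phone = Browser("phone")

laptop_first = site.handle_request(laptop)
laptop_second = site.handle_request(laptop)  # recognized via the cookie
phone_first = site.handle_request(phone)     # no cookie here: looks like a new user
```

In this toy model the site ends up with two visitor records for a single person, which is the gap that cross-device techniques attempt to close.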

Be sure to check out a few of our helpful ad tech flow charts:

NHTSA & FTC Critical of House Vehicle Safety Proposal

October 14, 2015 – The House Energy and Commerce Subcommittee on Commerce, Manufacturing, and Trade met to discuss proposals to improve motor vehicle safety. Much of the hearing focused on a recent committee staff draft that would incentivize the adoption of new vehicle safety technologies and that raises several privacy issues. Specifically, privacy and cybersecurity protections were highlighted, as well as the roles of the National Highway Traffic Safety Administration (“NHTSA”) and the Federal Trade Commission (“FTC”). Chairman Fred Upton (R-MI) set the tone for this conversation in his opening statement, saying that “what was once science fiction, is today a reality.” Representative Marsha Blackburn (R-TN) emphasized that there will be a quarter billion connected cars by 2020.

The first panel consisted of testimony by representatives of NHTSA and the FTC. Both representatives were critical of certain aspects of the draft.

Dr. Mark R. Rosekind, Administrator of NHTSA, raised several concerns regarding the draft proposal during his testimony. First, he argued the proposal gives car manufacturers the power to create best practices that may undermine NHTSA’s mission. Though the draft requires automakers to submit these best practices to NHTSA, it does not give NHTSA the authority to accept or propose changes. Additionally, the draft does not provide NHTSA enforcement authority. Instead, the draft allows for fines of up to $1 million for violations of privacy policies. Rosekind argued, however, that these fines are not a sufficient deterrent. Second, Rosekind suggested that best practices should be developed in conjunction with NHTSA, not by an industry majority. The proposal establishes a council for the development of best practices in which car manufacturer representatives would hold at least 50 percent of the seats.

Finally, he argued that NHTSA is better positioned to address vehicle technology concerns because of its experience in this area. NHTSA has a council for vehicle electronics, which has been looking at these issues since 2012. Moreover, NHTSA and the FTC have met, as recently as last year, to discuss Vehicle-to-Vehicle (“V2V”) technology and to coordinate their efforts. He supported a collaborative approach with industry on vehicle technologies, pointing to Automatic Emergency Braking Systems (“AEB”) as one area where industry cooperated with NHTSA to successfully create and institute industry best practices.

Maneesha Mithal from the Division of Privacy and Identity Protection at the FTC’s Bureau of Consumer Protection identified three problems with the draft proposal. First, the FTC would have no enforcement authority over manufacturers that fail to follow adopted best practices or that engage in deceptive practices. The draft provides manufacturers with a safe harbor, placing manufacturers outside of the FTC’s authority once they have adopted best practices. Ms. Mithal believes this safe harbor is too broad and provided an example. Because the privacy requirements of the draft only apply to vehicle data collected from owners, renters or lessees, a manufacturer could misrepresent how it collects consumer data on its website and the FTC could not bring an action. Second, the draft contains a provision that would deny researchers the ability to identify vulnerabilities in vehicle systems: by imposing penalties of up to $100,000 for hacking, it discourages research and other positive uses of data. Third, she suggested that allowing car manufacturers to independently develop best practices might guarantee weaker regulations.

Elaborating on the third point, Ms. Mithal stated that though the draft identifies eight areas to be considered in the development of best practices, these are optional. Further, there is no requirement to update best practices as new technologies enter the market, and NHTSA is given too little discretion. In her concluding remarks, Ms. Mithal stated the staff draft provides substantial liability protection to car manufacturers in exchange for weak practices developed by an industry majority.

After the panel of regulators, representatives from the two major automotive trade associations provided testimony. Several key points were made on the subject of privacy and cybersecurity.

Mitch Bainwol, President and CEO of the Alliance of Automobile Manufacturers, emphasized the great investments being made by manufacturers in the development and adoption of safety measures. Mr. Bainwol cited a statistic estimating smart vehicle investments by manufacturers of new vehicles at $175 million per year. Additionally, Mr. Bainwol pointed to a claim by NHTSA that developments in technologies that reduce driver error could prevent up to 80% of accidents related to driver error.

John Bozzella, President and CEO of Global Automakers, began his testimony by listing the issues that presently command his attention, the adoption of connected cars in particular. He emphasized that (i) the benefits of connected car technologies significantly outweigh the challenges; (ii) automakers regularly engage with privacy advocates; and (iii) there is a conscious effort in the industry to stay ahead of privacy and cybersecurity concerns. On the last point, he cited the auto industry’s Information Sharing and Analysis Center (“ISAC”) and the positive effect it will have on many of these concerns.

The Future of Privacy Forum continues to monitor legislative efforts for privacy in connected cars and related technologies. We look forward to working with automakers on how to protect privacy and ensure the adoption of safe and innovative vehicle technologies.

Hector DeJesus, Legal Extern, Future of Privacy Forum

End of Safe Harbor? Understanding the CJEU’s Decision and Its Implications

Legal background

In Europe, the processing of personal data is governed by Directive 95/46/EC (the “Directive”), which sets forth rules under which data may be lawfully processed1. The transfer of personal data to third countries is restricted to countries that ensure an adequate level of protection2. While the Directive itself does not provide a definition of the concept of an adequate level of protection, article 25.2 states that “the adequacy of the level of protection afforded by a third country shall be assessed in the light of all the circumstances surrounding a data transfer operation or set of data transfer operations” and lists non-exhaustive criteria to consider when making such a determination3. Pursuant to article 25.6 of the Directive, the Commission “may find (…) that a third country ensures an adequate level of protection (…) by reason of its domestic law or of the international commitments it has entered into (…) for the protection of the private lives and basic freedoms and rights of individuals.”

On this basis, the US and the EU negotiated and established a framework ensuring an adequate level of protection for data transfers from the EU to organizations established in the US (the “Safe Harbor”). In 2000, the Commission adopted a decision (Decision 2000/520) declaring that the Safe Harbor framework ensured an adequate level of protection, therefore allowing the lawful transfer of data from the EU to the US4. However, the status of the US-EU Safe Harbor was called into question in November 2013 after Edward Snowden’s revelations regarding US mass surveillance programs5.

Background of the case

In 2012, Maximilian Schrems, an Austrian law student who has been a Facebook user since 2008, asked the company to provide him with a copy of all the data it held about him. Later on, he filed a complaint with the Irish Data Protection Commissioner (“DPC”) in which he asked the DPC to exercise its power by prohibiting Facebook Ireland from transferring his personal data to the US.6 Mr. Schrems contended in his complaint that the law and practice in force in the US did not ensure adequate protection of the personal data held in its territory against the surveillance activities engaged in by the public authorities. The DPC rejected his complaint, considering that there was no actual evidence that his data had been accessed by the NSA, and added that the allegations raised by Mr. Schrems could not, in any case, be put forward, as the Commission had already found in its Decision 2000/520 that the United States ensured an adequate level of protection. Mr. Schrems then went before the Irish High Court, which adjourned the case and referred it to the Court of Justice of the European Union (“CJEU”).

Questions before the CJEU

The CJEU addressed two questions in its judgment:

- Is the Data Protection Commissioner bound by the Commission’s decision that finds that the US ensures an adequate level of protection?

- Is Decision 2000/520 valid?

Ruling

The Court ruled that:

- National DPAs are not bound by a decision adopted by the Commission on the basis of Article 25.6 of the Directive. They therefore have to investigate a claim lodged by an individual concerning the protection of his rights with regard to personal data relating to him which has been transferred from the EU to a third country, when that person contends that that country does not actually provide an adequate level of protection.

A decision adopted pursuant to Article 25.6 of the Directive (such as the Commission Decision 2000/520/EC of 26 July 2000), by which the European Commission finds that a third country ensures an adequate level of protection, “does not prevent a supervisory authority [data protection authority] of a Member State from examining the claim of a person concerning the protection of his rights and freedoms in regard to the processing of personal data relating to him which has been transferred from a Member State to that third country when that person contends that the law and practices in force in the third country do not ensure an adequate level of protection.”

- Decision 2000/520 is invalid.

CJEU judgment

Is a national DPA bound by a Commission decision?

In its decision, the Court highlights the importance of interpreting the Directive “in the light of the fundamental rights guaranteed by the Charter”, notably the right to respect for private life. Consequently, the independence of national Data Protection Authorities (“DPA”) must be regarded as an essential component of the protection of individuals with regard to the processing of personal data. The Court recognized that DPAs’ power is limited to processing of personal data carried out in their territory. However, it considers that “the operation consisting in having personal data transferred from a Member State to a third country constitutes, in itself, processing of personal data carried out in a Member State.” Each DPA is therefore vested with the power to check whether a transfer of personal data from its own Member State to a third country complies with the requirements laid down by the Directive7.

Commission decisions (adopted on the basis of Article 25.6 of the Directive) are binding on all Member States and all their organs8. Only the CJEU has jurisdiction to declare such a decision invalid9, in order to guarantee legal certainty by ensuring that EU law is applied uniformly. However, a Commission decision cannot prevent individuals from exercising their right to lodge a claim with their national DPA10. Likewise, such a decision “cannot eliminate or reduce the powers expressly accorded to the national supervisory authorities” [DPA] both by the Charter and the Directive11.

Is the Commission Decision 2000/520 valid?

According to the Court, although the Directive does not contain any definition of an adequate level of protection, it must be understood as requiring the third country to ensure in fact a level of protection of fundamental rights and freedoms that is essentially equivalent to that guaranteed within the EU12. It is incumbent upon the Commission to check periodically whether the finding relating to the adequacy of the level of protection ensured by the third country in question is still factually and legally justified. Such a check is required, in any event, when evidence gives rise to a doubt in that regard13 (referring to the Snowden revelations).

The Court judged that it was not even necessary to examine the content of the Safe Harbor principles, as Article 1 of the Commission Decision does not comply with Article 25.6 of the Directive. First, derogations and limitations in relation to the protection of personal data need to be applied “only in so far as is strictly necessary”14, and the Court considered that the bulk collection of data operated by the NSA “without any differentiation, limitation or exception being made in the light of the objective pursued and without an objective criterion being laid down by which to determine the limits of the access of the public authorities to the data, and of its subsequent use, for purposes which are specific, strictly restricted and capable of justifying the interference which both access to that data and its use entail”15 violated the principles of the Directive.

Secondly, the Safe Harbor deprived individuals of legal remedies to obtain access to personal data relating to them, or to obtain the rectification or erasure of such data, which does not respect the essence of the fundamental right to effective judicial protection, as enshrined in the Charter16.

Thirdly, by limiting the DPA’s power to investigate, the Commission, in its Decision 2000/520, exceeded the power conferred upon it in Article 25.6 of the Directive17.

Key points to remember

- A data protection authority (supervisory authority) of a Member State is not bound by a Commission Decision adopted on the basis of Article 25.6 of the Directive (a Decision in which the Commission can declare that a third country provides an adequate level of protection).

- The CJEU has exclusive jurisdiction to declare an EU act (such as Decision 2000/520) invalid. Therefore, if a DPA examines a claim and finds it well founded, it has to go before a National Court, which will have to request a preliminary ruling from the CJEU on the validity of the Decision. Neither a DPA nor a National Court can declare such a decision invalid.

- Individuals have the right to file a claim with their local DPA regarding the protection of their rights. The DPA must be able to examine the claim, with complete independence, to determine whether the processing (transfer of data in that instance) complies with the requirements laid down by the Directive.

- The Safe Harbor was declared invalid because the bulk collection of data violates the requirement that derogations be made only in so far as is strictly necessary, and because the lack of access to legal remedies for individuals violates the fundamental rights contained in the Charter and the Directive.

Consequences of the CJEU decision

Some believe the CJEU reached this decision not because of companies’ behavior but rather because the US government was compelling companies to provide information under Section 702 of the Foreign Intelligence Surveillance Act. This historic decision should provide momentum for Congress to amend Section 702 and undertake reforms, which could include a greater ability to challenge surveillance, greater transparency, allowing surveillance only for limited purposes, prohibiting upstream acquisition, and applying better limits on the dissemination and retention of data.

Data transfers do not stop overnight, and given the workload of DPAs, there should be a grace period that companies can use to find a way forward. The bigger enforcement risk probably comes from EU citizens, who have the right to file complaints with their local DPA.

What are some other ways to transfer data from the EU to the US?

Alternative mechanisms to consider include:

- Binding Corporate Rules18

- Data transfer agreements based on the EU Commission Model Clauses19

- Consent20

Are the other Commission decisions finding an adequate level of protection in other countries still valid?

The decision does not apply to other adequacy decisions, which remain binding on all Member States until they are actually declared invalid. As explained above, such a decision can only be declared invalid by the CJEU. Therefore, it would require going through another lengthy process of complaints to a DPA, a national court and the CJEU. For now, other adequacy decisions and other mechanisms still stand.

What should companies do?

The best step for companies at this point is likely to wait for the guidance that should be issued by the European DPAs in the very near future.

Indeed, as announced by the Article 29 Working Party, data protection authorities from Member States are to meet soon in order to issue guidance and provide a coordinated response to the Court’s decision21.

Information Commissioner Christopher Graham advised in London on 8 October: “Don’t panic.” The ICO will not be “knee-jerking into sudden enforcement of a new arrangement. We are coordinating our thinking very much with the other data protection authorities across the EU.”22 This view was confirmed by the Dutch and French DPAs in their respective declarations23 addressing the CJEU decision. Each of them referred to the Article 29 Working Party’s announcement of an emergency meeting.24

Some companies are already taking steps to implement alternative mechanisms such as model contracts25. Since model contracts have no mechanism to override national surveillance or law enforcement access to data, there is no logical reason for them to be an acceptable legal alternative to the Safe Harbor. However, they technically are valid and will remain so until Schrems or another critic brings the same legal action to the courts. Perhaps this crisis will lead to a relatively quick political solution that provides an alternative to Safe Harbor. If not, companies can at that time assess the available options.

-Bénédicte Dambrine, Legal Fellow

—

1 Directive 95/46/EC of the European Parliament and of the Council of 24 October 1995 on the protection of individuals with regard to the processing of personal data and on the free movement of such data (http://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:31995L0046&qid=1444227336670&from=EN)

2 Article 25.1 of the Directive

3 Articles 25.2 and 25.4 of the Directive

4 http://eur-lex.europa.eu/LexUriServ/LexUriServ.do?uri=CELEX:32000D0520:EN:HTML

5 Communication from the Commission to the European Parliament and the Council entitled ‘Rebuilding Trust in EU-US Data Flows’ (COM(2013) 846 final, 27 November 2013) and Communication from the Commission to the European Parliament and the Council on the Functioning of the Safe Harbour from the Perspective of EU Citizens and Companies Established in the EU (COM(2013) 847 final, 27 November 2013).

6 CJEU case C-362/14 of 6 October 2015 (http://curia.europa.eu/juris/document/document.jsf?text=&docid=169195&pageIndex=0&doclang=EN&mode=req&dir=&occ=first&part=1&cid=293038)

7 Section 47 of the Decision

8 Section 51 of the Decision

9 Section 61 of the Decision

10 Section 53 of the Decision

11 Section 53 of the Decision

12 Section 73 of the Decision

13 Section 76 of the Decision

14 Section 92 of the Decision

15 Section 93 of the Decision

16 Section 95 of the Decision

17 Section 103 of the Decision

18 http://ec.europa.eu/justice/data-protection/international-transfers/binding-corporate-rules/index_en.htm

19 http://ec.europa.eu/justice/data-protection/international-transfers/transfer/index_en.htm

20 Article 26.1(a) of the Directive

21 http://ec.europa.eu/justice/data-protection/article-29/press-material/press-release/art29_press_material/2015/20151006_wp29_press_release_on_safe_harbor.pdf

22 https://iapp.org/news/a/icos-graham-dont-panic

23 http://www.cnil.fr/linstitution/actualite/article/article/invalidation-du-safe-harbor-par-la-cour-de-justice-de-lunion-europeenne-une-decision-cl/

24 http://ec.europa.eu/justice/data-protection/article-29/press-material/press-release/art29_press_material/2015/20151006_wp29_press_release_on_safe_harbor.pdf

25 https://www.linkedin.com/pulse/safe-harbor-dead-official-statements-marius