Privacy Features of iOS 12 and macOS Mojave

While much media attention has focused on new Apple hardware, including new iPhones, Apple also released updated versions of its mobile and desktop operating systems for public download this week. The software upgrades (iOS 12 for iPhones, and macOS 10.14 Mojave for desktop Macs) bring many new features, including Group FaceTime and options to customize notifications, along with aesthetic changes such as an optional desktop “Dark Mode.”

Amidst these upgrades, what’s new for data privacy? Consumers are increasingly aware of privacy issues, and Apple has articulated the company’s commitment to privacy “as a human right.” Meanwhile, regulators are entering the consumer privacy debate, with this week’s Senate hearing bringing further attention to the data practices and policy positions of leading technology companies.

In its fall updates, Apple improves several existing privacy controls for iPhone users, and macOS 10.14 brings privacy-focused technical modifications. Several of these updates were first announced at Apple’s June 2018 Worldwide Developers Conference (WWDC), which we discussed (along with major updates to Google’s Android P) earlier this summer, and have now been released to the public.

Below, we provide round-ups of privacy updates in iOS 12, macOS 10.14 (Mojave), and the App Store Review Guidelines.

Privacy Updates in iOS 12

The following privacy updates are included in iOS 12, which can be downloaded on devices as old as the iPhone 5s and iPad Air.

- USB Restricted Mode: In July 2018, Apple released iOS 11.4.1 and introduced USB Restricted Mode. This feature requires iPhone users to input their passcode to unlock the phone when connecting it to a USB accessory if the phone has been locked for an hour or more. iOS 12 implements additional USB restrictions, including disabling USB connections immediately after the device locks if more than three days have passed since the last USB connection. This increases protection for users who don’t often make such connections. Overall, USB Restricted Mode makes it much more difficult for an unauthorized person or entity, such as a stalker or phone thief, to unlock a user’s phone without permission.

- On-Device Machine Learning for Siri Suggestions: A new feature called Siri Suggestions uses machine learning to decide what apps and shortcuts to surface as a banner on the iPhone home screen. Siri Suggestions will be based on users’ patterns from signals like location, time of day and type of motion (e.g., walking, running, or driving). iOS 12 analyzes this data locally on the device rather than on remote servers, providing users with personalized experiences while limiting access to the underlying information.
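To make the USB Restricted Mode rules above concrete, here is a minimal sketch of the decision logic as this post describes it. It is only an illustration: the function name, parameter names, and constants are hypothetical and do not correspond to any Apple API, and the real implementation may differ in details not covered here. The sketch assumes the phone is currently locked.

```python
from datetime import timedelta

# Thresholds taken from the description above. All names here are
# hypothetical and chosen for illustration only; this is not Apple's code.
LOCKED_THRESHOLD = timedelta(hours=1)       # rule introduced in iOS 11.4.1
IDLE_USB_THRESHOLD = timedelta(days=3)      # additional rule in iOS 12


def usb_requires_passcode(time_since_lock, time_since_last_usb):
    """Return True if a locked phone should demand the passcode
    before talking to a newly connected USB accessory."""
    # iOS 12 rule: if no USB connection has been made for more than
    # three days, USB is disabled as soon as the device locks.
    if time_since_last_usb > IDLE_USB_THRESHOLD:
        return True
    # iOS 11.4.1 rule: otherwise, USB is restricted once the phone
    # has been locked for an hour or more.
    return time_since_lock >= LOCKED_THRESHOLD


# A phone locked ten minutes ago, last used with USB five hours ago.
recently_used = usb_requires_passcode(timedelta(minutes=10), timedelta(hours=5))
# A phone locked one minute ago, but with no USB connection for four days.
rarely_used = usb_requires_passcode(timedelta(minutes=1), timedelta(days=4))
```

In this sketch, the first phone still accepts the accessory, while the second asks for the passcode right away, which is the extra protection for users who rarely connect over USB.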

Privacy Updates in macOS 10.14 (Mojave)

- iOS-Style Permissions for Desktop Apps: macOS apps will now be required to request the user’s permission to access certain device sensors, such as the camera or microphone. These permissions have long been standard on iOS and other mobile operating systems.

- Intelligent Tracking Prevention 2.0: Building on a feature introduced last year in the Safari browser, Apple is introducing Intelligent Tracking Prevention 2.0 (ITP 2.0) as a default feature for Safari in macOS Mojave. Earlier versions of ITP used machine learning to identify domains with “tracking abilities” and restricted their cookies in third-party contexts once a 24-hour window had passed. ITP 2.0 removes that window and partitions such third-party cookies immediately. As a result, Safari will now prevent most website tracking from social media “Share” and “Like” buttons and other embedded content, unless the user consents to the data collection in a browser-prompted notification.

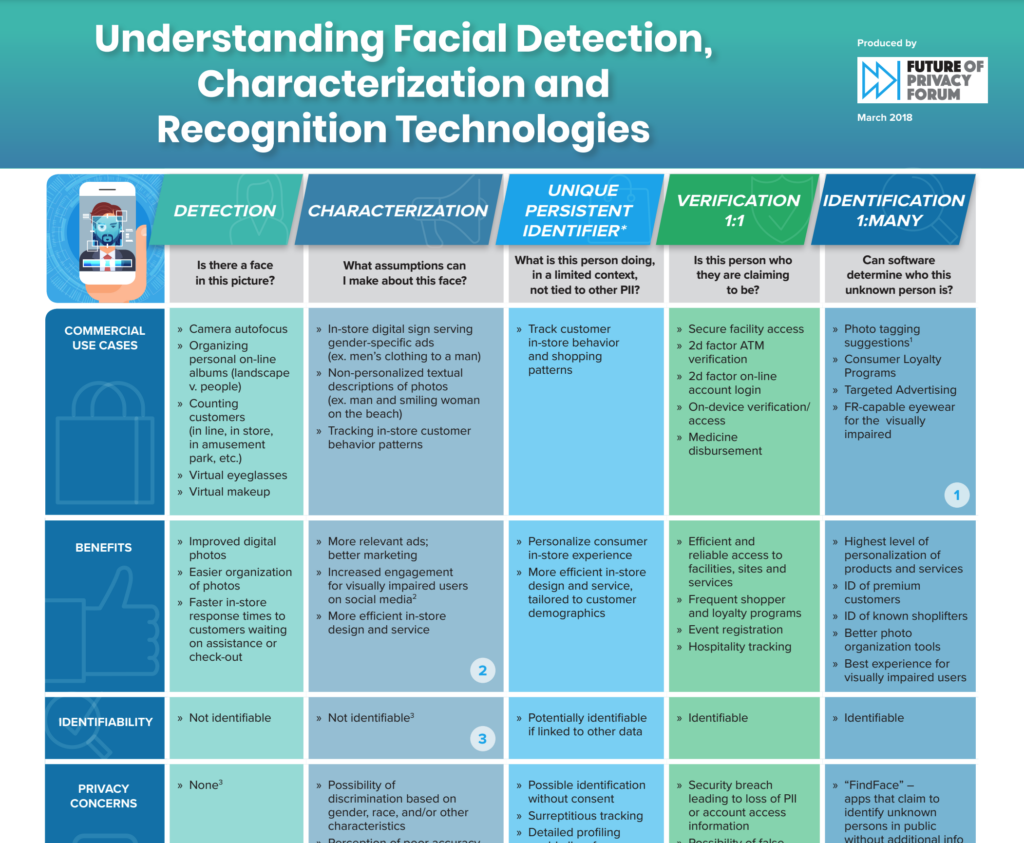

- Obfuscation of Device Fingerprints: Safari in macOS Mojave will contain updates designed to prevent device fingerprinting. As FPF described in a 2015 report on cross-device tracking, devices and browsers can be identified with a degree of probability through metadata sent in web traffic – such as system fonts, screen size, and installed plug-ins. This kind of digital “fingerprinting,” often referred to as server-side recognition, is often used for short-term advertising attribution and measurement. In Mojave, the Safari web browser will present websites with a “simplified system configuration,” in order to make many users’ “fingerprints” appear identical or very similar – reducing the efficacy of server-side recognition technologies.

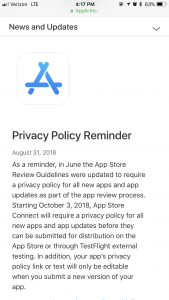
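The fingerprinting idea described above can be illustrated with a small sketch. It hashes a browser’s reported attributes into an identifier, then shows how reporting a simplified configuration makes two different machines indistinguishable. Everything here is an assumption for illustration: the attribute names, the choice of SHA-256, and especially which attributes the `simplify` function normalizes. It is not a description of how Safari or any tracking vendor actually works.

```python
import hashlib
import json


def fingerprint(config):
    """Derive a short identifier from the attributes a browser reports."""
    # Serialize with sorted keys so the same attributes always hash the same way.
    canonical = json.dumps(config, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def simplify(config):
    """Hypothetical 'simplified system configuration': report fixed
    defaults for fonts and plug-ins instead of the real values."""
    return {
        "fonts": ["default-system-fonts"],  # assumed placeholder value
        "plugins": [],                      # assumed: no plug-ins reported
        "screen": config["screen"],         # assumed: left unchanged
    }


# Two made-up users with the same screen size but different software.
alice = {"fonts": ["Futura", "Comic Sans"], "plugins": ["Flash"], "screen": "1440x900"}
bob = {"fonts": ["Garamond"], "plugins": [], "screen": "1440x900"}

distinct_before = fingerprint(alice) != fingerprint(bob)
identical_after = fingerprint(simplify(alice)) == fingerprint(simplify(bob))
```

With the full configurations, the two users produce different identifiers; once both report the simplified view, their identifiers match, so a server can no longer tell them apart on these attributes alone.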

Privacy Updates for Developers (App Store Review Guidelines)

In addition to technical updates to its operating systems, Apple has also made significant changes to its App Store Review Guidelines, the rules for how developers may collect and use personal information from users. These Guidelines, which apply to the third-party developers who provide apps through the App Store, can be very influential when paired with robust oversight. In May, Apple began removing apps from the App Store for violations of policies against sharing location data with third-party advertisers without users’ consent. In August, Apple removed apps from the App Store that violated its policies against collecting data to build user profiles or contact databases.

Updates to the Guidelines include:

- Developers are not permitted to create databases from users’ address book information (contact lists and photos). (5.1.2)

- Developers must clearly describe new features and changes in the “What’s New” section of the App Store. (2.3.12)

- Developers must request explicit user consent and provide a “clear visual indication when recording, logging, or otherwise making a record of user activity.” (2.5.14)

- Developers must provide users with all information used to target a user with an ad without leaving the app. (3.1.3(b))

- All apps must include a link to their privacy policy in the App Store Connect metadata field and within the app. (5.1.1)

- Developers must include a mechanism to revoke social network credentials and disable data access between the app and social network from within the app. (5.1.1)

- Developers must respect the user’s permission settings and not attempt to manipulate, trick, or force people to consent to unnecessary data access. (5.1.1)

- New language in the Developer Code of Conduct states:

“Customer trust is the cornerstone of the App Store’s success. Apps should never prey on users or attempt to rip-off customers, trick them into making unwanted purchases, force them to share unnecessary data, raise prices in a tricky manner, charge for features or content that are not delivered, or engage in any other manipulative practices within or outside of the app.” (5.5)

Summary

Amidst new hardware and design features, Apple has introduced important technical updates to iOS 12 and macOS that aim to empower users to better manage their information. Developers should also take note of significant changes to the App Store Review Guidelines, which help determine the ways in which apps and app partners can collect and handle user data. These changes help provide users with the ability to make more informed decisions that reflect their privacy preferences.