AI Audits, Equity Awareness in Data Privacy Methods, and Facial Recognition Technologies are Major Topics During This Year’s Privacy Papers for Policymakers Events

Author: Judy Wang, Communications Intern, FPF

The Future of Privacy Forum (FPF) hosted two engaging events honoring 2023’s must-read privacy scholarship at the 14th Annual Privacy Papers for Policymakers ceremonies.

On Tuesday, February 27, FPF hosted a Capitol Hill event featuring an opening keynote by U.S. Senator Peter Welch (D-VT) as well as facilitated discussions with the winning authors: Mislav Balunovic, Emily Black, Albert Fox Cahn, Brenda Leong, Hideyuki Matsumi, Claire McKay Bowen, Joshua Snoke, Daniel Solove, and Robin Staab. Experts from academia, industry, and government moderated these policy discussions, including Michael Akinwumi, Didier Barjon, Miranda Bogen, Edgar Rivas, and Alicia Solow-Niederman.

On Friday, March 1, FPF honored winners of internationally focused papers in a virtual conversation hosted by FPF Global Policy Manager Bianca-Ioana Marcu, with FPF CEO Jules Polonetsky providing opening remarks. Watch the virtual event here.

For the in-person event on Capitol Hill, Jordan Francis, FPF’s Elise Berkower Fellow, provided welcome remarks and emceed the night, thanking Alan Raul, FPF Board President, and Debra Berlyn, FPF Board Treasurer, for being present. Mr. Francis noted he was excited to present leading privacy research relevant to Congress, federal agencies, and international data protection authorities (DPAs).

FPF’s Jordan Francis

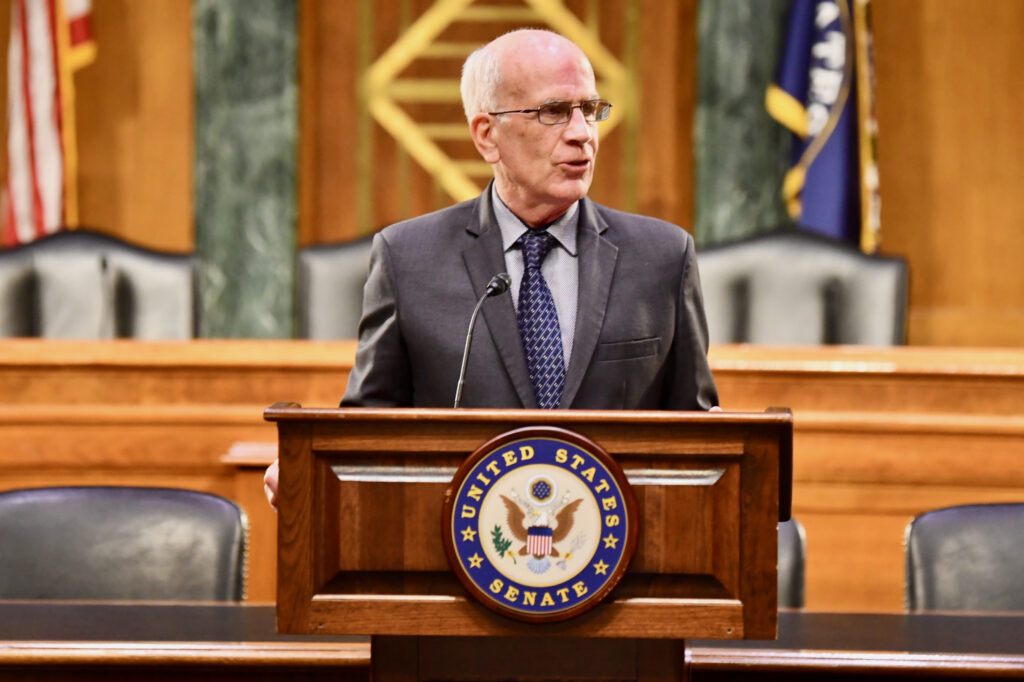

In his keynote, Senator Welch celebrated the importance of privacy and the pioneering work done by this year’s winners. He emphasized that privacy is a right that should be protected constitutionally and that researchers studying digital platforms are essential for understanding evolving technologies and their impacts on our privacy. He also told the authors that their scholarship is consistent with the pioneering work of Justice Louis Brandeis and Samuel Warren, stating that “the fundamental respect that they had then underlies the work that you do for American citizens today.” He concluded his remarks by highlighting the need for an agency devoted to protecting privacy and noting that the authors’ work is providing that foundation.

Senator Peter Welch (D-VT)

Following Senator Welch’s keynote address, the event shifted to discussions between the winning authors and expert discussants. The 2023 PPPM Digest includes summaries of the papers and more information about the authors.

Professor Emily Black (Barnard College, Columbia University) kicked off the first discussion of the night with Michael Akinwumi (Chief Responsible AI Officer at the National Fair Housing Alliance) by talking about her paper, Less Discriminatory Algorithms, co-written with Logan Koepke (Upturn), Pauline Kim (Washington University School of Law), Solon Barocas (Microsoft Research), and Mingwei Hsu (Upturn). Their paper argues that entities that use algorithmic systems in traditional civil rights domains like housing, employment, and credit should have a duty to search for and implement less discriminatory algorithms (LDAs). During the conversation, Professor Black discussed model multiplicity and argued that businesses should bear the burden of proactively searching for less discriminatory alternatives. She and Mr. Akinwumi also discussed reframing the industry approach, what regulatory guidance could look like, and how this aligns with President Biden’s “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.”

Michael Akinwumi and Professor Emily Black

Next, Claire McKay Bowen (Urban Institute) and Joshua Snoke (RAND Corporation) discussed their paper, Do No Harm Guide: Applying Equity Awareness in Data Privacy Methods, with Miranda Bogen (Director, AI Governance Lab at the Center for Democracy & Technology). Their paper uses interviews with experts on privacy-preserving methods and data sharing to highlight equity-focused work in statistical data privacy. Their conversation explored questions such as “What are privacy-utility trade-offs?” and “What do we mean by data representation?” It also highlighted real-world examples of equity issues surrounding data access, such as the tension between informing prospective transgender students about campus demographics and protecting current transgender students at law schools. They also touched on aspirational workflows, including tools and recommendations. Attendees asked questions regarding data cooperatives, census data, and more.

Miranda Bogen, Claire McKay Bowen, and Joshua Snoke

Brenda Leong (Luminos.Law) and Albert Fox Cahn (Surveillance Technology Oversight Project) discussed their paper AI Audits: Who, When, How…Or Even If? with Edgar Rivas (Senior Policy Advisor for U.S. Senator John Hickenlooper (D-CO)). Co-written with Evan Selinger (Rochester Institute of Technology), their paper explains why AI audits are often regarded as essential tools within an overall responsible governance system while also discussing why some civil rights experts are skeptical that audits can fully address all AI system risks. During the conversation, Ms. Leong stated that AI audits need to be developed and analyzed because they will be included in governance and legislation. Mr. Cahn raised important questions, such as whether we have the accountability necessary for AI audits already being deployed and whether audit elements voluntarily provided in the private sector can translate to public compliance. The co-authors also discussed New York City’s 2023 audit law (used as a case study in their paper), commenting that the law’s standards and broad application potentially open the door for discussion of key issues, including those relating to discriminatory models.

Brenda Leong and Albert Fox Cahn

During the next panel, Professor Daniel Solove (George Washington University Law School) discussed his paper Data Is What Data Does: Regulating Based on Harm and Risk Instead of Sensitive Data with Didier Barjon (Legislative Assistant for U.S. Senate Majority Leader Charles Schumer (D-NY)). His paper argues that heightened protection for sensitive data does not work because the sensitive data categories are vague and lack a coherent theory for identifying them. In their discussion, Professor Solove noted that sensitive information can still be inferred from non-sensitive data, making it difficult to know which combinations of data can become sensitive and which cannot. He then stated that to be effective, privacy law must focus on harm and risk rather than the nature of personal data: “Categories are not proxies—[we] need to do the hard work of figuring out the harm and risk around data.”

Didier Barjon and Professor Daniel Solove

Professor Solove and Mr. Barjon were then joined on stage by Hideyuki Matsumi (Vrije Universiteit Brussel) to discuss Professor Solove’s and Mr. Matsumi’s co-authored paper, The Prediction Society: Algorithms and the Problems of Forecasting the Future. Their paper raises concerns about the rise of algorithmic predictions and how they not only forecast the future but also have the power to create and control it. Mr. Barjon asked the authors about the “self-fulfilling prophecy” problem discussed in the paper, and Mr. Matsumi explained that this refers to the idea that people perform better if there’s a higher expectation to do so and vice versa. Therefore, even if an algorithmic prediction is inaccurate, individuals susceptible to or prone to believe the prediction will be impacted, and the prediction will be made true, leading to what the authors called a “doom cycle.” The authors advocated for a risk-based approach to predictions and stated that we should analyze and think deeply about predictions rather than ban them altogether.

Hideyuki Matsumi and Professor Daniel Solove

In the evening’s final presentation, Robin Staab and Mislav Balunovic (ETH Zurich SRI Lab) discussed their paper, Beyond Memorization: Violating Privacy Via Inference with Large Language Models, with Professor Alicia Solow-Niederman (George Washington University Law School). Their paper, co-written with Mark Vero and Professor Martin Vechev (ETH Zurich SRI Lab), examines the capabilities of pre-trained large language models (LLMs) to infer personal attributes of a person from text on the internet and raises concerns about how ineffective current measures are at protecting user privacy from LLM inferences. Professor Solow-Niederman asked the authors about the provider intervention suggested in the paper that could potentially align models to be privacy-protected. The authors noted that there are limitations to what providers can do and that there is a tradeoff between having better inferences across all areas and having limited inferences but better privacy. They also stated that we need to be aware that alignment is not the solution and that the way to move forward is for users to be aware that such inferences can happen and to have the tools to write text from which inferences cannot be made.

Professor Alicia Solow-Niederman, Robin Staab, and Mislav Balunovic

As panel discussions ended, FPF SVP for Policy John Verdi closed the event by thanking the audience, winning authors, judges, discussants, the FPF Events team, and FPF’s Jordan Francis for making the event happen.

John Verdi

Thank you to Senator Peter Welch and Honorary Co-Hosts Congresswoman Diana DeGette (D-CO-1) and Senator Ed Markey (D-MA), Co-Chairs of the Congressional Privacy Caucus. We would also like to thank our winning authors, expert discussants, those who submitted papers, and event attendees for their thought-provoking work and support.

Later that week, FPF honored the winners of internationally focused papers in a virtual conversation hosted by FPF Global Policy Manager Bianca-Ioana Marcu, with FPF CEO Jules Polonetsky providing opening remarks.

The first discussion was moderated by FPF Policy Counsel Maria Badillo with authors Luca Belli (Fundação Getulio Vargas (FGV) Law School) and Pablo Palazzi (Allende & Brea) on their paper, Towards a Latin American Model of Adequacy for the International Transfer of Personal Data, co-authored by Dr. Ana Brian Nougrères (University of Montevideo), Jonathan Mendoza Iserte (National Institute of Transparency, Access to Information and Personal Data Protection), and Nelson Remolina Angarita (Law School of the University of the Andes). The conversation focused on diverse mechanisms for data transfers, such as the adequacy system, and the relevance and necessity of having a regional model of adequacy, including the benefits of having a Latin American model. The authors also discussed the role of the Ibero-American Data Protection Network.

Maria Badillo, Pablo A. Palazzi, and Professor Luca Belli

The second discussion of the event was led by FPF Senior Fellow and Considerati Managing Director Cornelia Kutterer with author Catherine Jasserand (University of Groningen) on her winning paper, Experiments with Facial Recognition Technologies in Public Spaces: In Search of an EU Governance Framework. Their conversation highlighted the experiments and trials described in the paper as well as the legality of facial recognition technologies under data protection law. The second portion of the discussion focused on the EU AI Act and how it relates to the relevance and applicability of the laws highlighted in the paper.

Cornelia Kutterer and Professor Catherine Jasserand

We hope to see you next year at the 15th Annual Privacy Papers for Policymakers!