When is a Biometric No Longer a Biometric?

In October 2021, the White House Office of Science and Technology Policy (OSTP) published a Request for Information (RFI) regarding uses, harms, and recommendations for biometric technologies. Over 130 entities responded to the RFI, including advocacy organizations, scientists, healthcare experts, lawyers, and technology companies. While most commenters agreed on the core concepts of biometric technologies used to identify individuals or verify identity (with differences in how to address them in policy), there was a clear division over the extent to which the law should apply to emerging technologies used for physical detection and characterization (such as skin cancer detection or diagnostic tools). These comments reveal that there is no general consensus on what “biometrics” should entail and thus what the applicable scope of law should be.

Using the OSTP comments as a reference point, this briefing explores four main points regarding the scope of “biometrics” as it relates to emerging technologies that rely on human body-based data but are not used to identify or track:

- Biometric technologies range widely in purpose and risk profile (including 1:1 verification, 1:many identification, tracking, characterization, and detection).

- Current U.S. biometric privacy laws and regulatory guidance largely limit the scope of “biometrics” to identification and verification (with the exception of Texas and a few caveats surrounding ongoing litigation in Illinois).

- Many academics and civil society members argue the existing framework should be expanded to other emerging technologies such as detection and characterization tools because these uses can still pose risks to individuals, particularly in exacerbating discrimination against marginalized communities.

- Many in industry disagree with expanding the definitional scope, and posit that identification/verification technologies pose very different types and levels of risks from detection and characterization, and thus there should be a regulatory distinction.

As policymakers consider how to regulate biometric data, they should understand the different technologies, the risks associated with each, and the existing laws and frameworks. They should also take into account the policy arguments for how the law should interact with emerging technologies (such as “characterization” and “detection”) that rely on an individual’s physical data but are not used to identify or track.

1. Types of Biometric and Body-Based Technologies

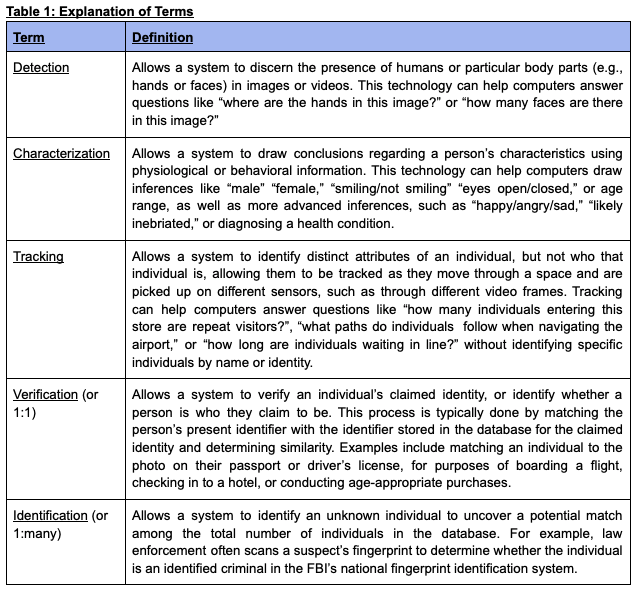

In Future of Privacy Forum’s 2018 Privacy Principles for Facial Recognition Technology in Commercial Applications, FPF distinguished among five types of facial recognition technologies: detection, characterization, unique persistent tracking, 1:1 verification, and 1:many identification. The same distinctions apply readily to the broader world of biometric data. Most notably, unlike identification and verification, detection and characterization technologies are designed to detect or infer bodily characteristics or behavior without making the subject identifiable (meaning PII is typically not retained), unless the user actively links the data to a known identity or unique profile. A brief explanation of the terms and distinctions is provided in Table 1.
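
The difference between these categories can be illustrated with a minimal sketch. It is not drawn from the FPF principles or from any real biometric system: the feature vectors, the cosine-similarity comparison, the 0.9 threshold, and the function names `verify`, `identify`, and `detect` are all assumptions chosen for illustration. The point is only that 1:1 verification compares a sample against one claimed template, 1:many identification searches a whole gallery, and detection consults no stored templates at all.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b)))


def verify(probe, enrolled_template, threshold=0.9):
    """1:1 verification: compare the probe with the single template
    belonging to the identity the person claims (hypothetical threshold)."""
    return cosine(probe, enrolled_template) >= threshold


def identify(probe, gallery, threshold=0.9):
    """1:many identification: search every enrolled template and return
    the best-matching identity, or None if nothing clears the threshold."""
    best_id, best_score = None, threshold
    for person_id, template in gallery.items():
        score = cosine(probe, template)
        if score >= best_score:
            best_id, best_score = person_id, score
    return best_id


def detect(face_vectors_in_frame):
    """Detection: report only how many faces are present. No gallery is
    consulted, so the output is not linked to any identity."""
    return len(face_vectors_in_frame)


# Hypothetical enrolled templates and a new sample.
gallery = {"alice": [1.0, 0.0], "bob": [0.0, 1.0]}
probe = [0.99, 0.05]

claimed_identity_ok = verify(probe, gallery["alice"])
who = identify(probe, gallery)
people_in_frame = detect([probe, [0.2, 0.8]])
```

In this sketch, only `verify` and `identify` touch stored templates tied to a person; `detect` produces a count and nothing else, which is the distinction the five-part taxonomy draws.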

In considering these distinctions, the OSTP responses broadly showcased two contrasting frameworks for which technologies should fall under the scope of “biometrics”:

- Biometric data should be defined by the source of the data itself (i.e., it’s biometrics because it’s data derived from an individual’s body). Therefore, any processing activity dependent on data from an individual’s body, including detection and characterization, should be regulated under biometric privacy laws.

- Biometric data should be defined by the processing activity (i.e., it’s biometrics because it’s unique physical data used to identify or verify individuals). Therefore, only those uses should be regulated under biometric privacy laws. Since detection and characterization are not used for identification, they should not fall within the scope of the law.
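
One way to see how the two frameworks diverge is to write each as a simple scope test. The sketch below is an illustration only, not a statement of any statute or of any respondent’s proposal: the `Processing` record, its fields, and both function names are hypothetical, and the two example activities are taken from use cases mentioned elsewhere in this briefing.

```python
from dataclasses import dataclass

# Purposes that the processing-based framework treats as "biometric".
IDENTIFYING_PURPOSES = {"verification", "identification"}


@dataclass
class Processing:
    """A hypothetical description of one data-processing activity."""
    name: str
    uses_body_data: bool
    purpose: str  # e.g. "detection", "characterization", "verification"


def in_scope_source_based(p: Processing) -> bool:
    """Framework 1: any processing of body-derived data is in scope."""
    return p.uses_body_data


def in_scope_processing_based(p: Processing) -> bool:
    """Framework 2: body-derived data is in scope only when it is
    used to identify or verify an individual."""
    return p.uses_body_data and p.purpose in IDENTIFYING_PURPOSES


autofocus = Processing("camera auto-focus", True, "detection")
timekeeping = Processing("employee timekeeping", True, "verification")

for activity in (autofocus, timekeeping):
    print(activity.name,
          in_scope_source_based(activity),
          in_scope_processing_based(activity))
```

The two tests agree on the timekeeping system and disagree on auto-focus, which is exactly where the OSTP respondents split. How unique persistent tracking would be classified under the second test is not addressed here, because the framework as summarized above names only identification and verification.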

2. “Biometrics” Under Existing Legal Frameworks in the U.S.

Definitions in U.S. state biometric privacy laws and comprehensive data privacy laws largely limit the scope of “biometric information” or “biometric data” to data collected for purposes related to identification, with some exceptions (including Texas, and emerging case law in Illinois) (see Table 2). Biometric data privacy laws in the U.S. were mainly passed to mitigate the privacy and security risks associated with individuals’ biometric data, since the data is inherently unique and cannot be altered. For example, Section 14/5 of the Illinois Biometric Information Privacy Act states:

“Biometrics are unlike other unique identifiers that are used to access finances or other sensitive information. For example, social security numbers, when compromised, can be changed. Biometrics, however, are biologically unique to the individual; therefore, once compromised, the individual has no recourse, is at heightened risk for identity theft, and is likely to withdraw from biometric-facilitated transactions.”

How U.S. policymakers and the courts have thought about the scope of these laws is heavily dependent on the Illinois Biometric Information Privacy Act (BIPA), the seminal biometric privacy law in the U.S. Importantly, BIPA contains a private right of action that has allowed courts to decide the boundaries of which technologies should and should not fall within the scope of the law. The technologies most often targeted under the law include social media photo tagging features, employee timekeeping verification systems, and facial recognition. Thus far, no state or federal court appears to have conclusively held that BIPA applies to purely detection-based or characterization technology that is not used for identification or verification purposes. Ongoing litigation, however, is raising important questions about whether and when detection and characterization technologies overlap with the terms used to define biometrics in the law. For example, in Gamboa v. The Procter & Gamble Company, the Northern District of Illinois must decide to what extent “facial geometry” can apply to uses such as detecting the position of a toothbrush in a user’s mouth.

| Jurisdiction | Definition |
|---|---|
| Illinois\* 740 ILCS 14 | “Biometric information” means any information, regardless of how it is captured, converted, stored, or shared, based on an individual’s biometric identifier used to identify an individual. Biometric information does not include information derived from items or procedures excluded under the definition of biometric identifiers. “Biometric identifier” means a retina or iris scan, fingerprint, voiceprint, or scan of hand or face geometry.\* “Biometric identifier” does not include: – writing samples, written signatures, photographs, human biological samples used for valid scientific testing or screening, demographic data, tattoo descriptions, or physical descriptions such as height, weight, hair color, or eye color. – donated organs, tissues, or parts as defined in the Illinois Anatomical Gift Act or blood or serum stored on behalf of recipients or potential recipients of living or cadaveric transplants and obtained or stored by a federally designated organ procurement agency. – biological materials regulated under the Genetic Information Privacy Act. – information captured from a patient in a health care setting or information collected, used, or stored for health care treatment, payment, or operations under the federal Health Insurance Portability and Accountability Act of 1996. – X-ray, roentgen process, computed tomography, MRI, PET scan, mammography, or other image or film of the human anatomy used to diagnose, prognose, or treat an illness or other medical condition or to further validate scientific testing or screening. \*BIPA defines both “biometric information” and “biometric identifier,” with the substantive requirements of the law applying to both. Some emerging case law is finding that BIPA applies to the processing of biometric identifiers even when the data is not used to identify a specific individual but is nonetheless processed by facial recognition software. See, e.g., Monroy v. Shutterfly, Inc., Case No. 16 C 10984 (N.D. Ill. Sep. 15, 2017); In re Facebook Biometric Info. Privacy Litig., 185 F. Supp. 3d 1155 (N.D. Cal. 2016). |
| Washington Wash. Rev. Code Ann. §19.375.020 | “Biometric identifier” means data generated by automatic measurements of an individual’s biological characteristics, such as a fingerprint, voiceprint, eye retinas, irises, or other unique biological patterns or characteristics that is used to identify a specific individual. “Biometric identifier” does not include a physical or digital photograph, video or audio recording or data generated therefrom, or information collected, used, or stored for health care treatment, payment, or operations under the federal health insurance portability and accountability act of 1996. |
| Texas Tex. Bus. & Com. Code §503.001 | “Biometric identifier” means a retina or iris scan, fingerprint, voiceprint, or record of hand or face geometry. |
| California (“CPRA”) §1798.140(c) | “Biometric information” means an individual’s physiological, biological or behavioral characteristics, including information pertaining to an individual’s deoxyribonucleic acid (DNA), that is used or is intended to be used singly or in combination with each other or with other identifying data, to establish individual identity. Biometric information includes, but is not limited to, imagery of the iris, retina, fingerprint, face, hand, palm, vein patterns, and voice recordings, from which an identifier template, such as a faceprint, a minutiae template, or a voiceprint, can be extracted, and keystroke patterns or rhythms, gait patterns or rhythms, and sleep, health, or exercise data that contain identifying information. |
| Virginia §59.1-571 | “Biometric data” means data generated by automatic measurements of an individual’s biological characteristics, such as a fingerprint, voiceprint, eye retinas, irises, or other unique biological patterns or characteristics that is used to identify a specific individual. “Biometric data” does not include a physical or digital photograph, a video or audio recording or data generated therefrom, or information collected, used, or stored for health care treatment, payment, or operations under HIPAA. |
| Utah S.B.0227 | “Biometric data” includes data described in Subsection (6)(a) that are generated by automatic measurements of an individual’s fingerprint, voiceprint, eye retinas, irises, or any other unique biological pattern or characteristic that is used to identify a specific individual. “Biometric data” does not include: (i) a physical or digital photograph; (ii) a video or audio recording; (iii) data generated from an item described in Subsection (6)(c)(i) or (ii); (iv) information captured from a patient in a health care setting; or (v) information collected, used, or stored for treatment, payment, or health care operations as those terms are defined in 45 C.F.R. Parts 160, 162, and 164. |
| Connecticut SB6 | “Biometric data” means data generated by automatic measurements of an individual’s biological characteristics, such as a fingerprint, a voiceprint, eye retinas, irises or other unique biological patterns or characteristics that are used to identify a specific individual. “Biometric data” does not include (A) a digital or physical photograph, (B) an audio or video recording, or (C) any data generated from a digital or physical photograph, or an audio or video recording, unless such data is generated to identify a specific individual. |

3. Arguments for Expanding Biometric Regulations to Include Detection and Characterization Technologies

The OSTP RFI demonstrates how the scope of biometric privacy laws could be expanded beyond identification-based technologies. The RFI used “biometric information” to refer to “any measurements or derived data of an individual’s physical (e.g., DNA, fingerprints, face or retina scans) and behavioral (e.g., gestures, gait, voice) characteristics.” The OSTP further noted that “we are especially interested in the use of biometric information for: Recognition…and Inference of cognitive and/or emotion state.”

Many respondents, largely from civil society and academia, discussed the risks of technologies that collect and track an individual’s body-based data for detection, characterization, and other inferences. Specific use cases identified in the responses included: counting the number of customers in a store (by detecting and counting faces in video footage); diagnosing a skin condition; tools used to infer human emotion, disposition, character, and intent (EDCI); eye and head movement tracking; and vocal biomarkers (medical deductions based on inflections in a person’s voice).

In all of these examples, respondents emphasized that bodily detection and characterization technologies carry significant risks of inaccuracy and discrimination. Even if not used to identify or track, respondents argued that detection and characterization technologies are still harmful and unreliable, largely because they are built upon unverified assumptions and pseudoscience. For instance, respondents noted that:

- EDCI tooling (using facial characterization to infer emotional or mental states) is unsound because there is no reliable or universal relationship between emotional states and observable biological activity.

- Video analytics that claim to detect lies or deception through eye tracking are unreliable because the link between high-level mental states such as “truthfulness” and low-level, involuntary external behavior is too ambiguous and unreliable to be of use.

- The real-world performance of models used to diagnose patients based on speech and language (vocal biomarkers) is not properly validated.

As a result, many experts argued that these systems exacerbate discrimination and existing inequalities against protected classes, most notably people of color, women, and people with disabilities. For example, Dr. Joy Buolamwini, a leading AI ethicist, points to her peer-reviewed MIT study demonstrating how commercial facial analysis systems used to detect skin cancer exhibit lower rates of accuracy for darker-skinned females. As a result, women of color have a higher rate of misdiagnosis. In another example, the Center for Democracy and Technology notes, in examining the use of facial analysis for diagnoses:

“. . . facial analysis has been used to diagnose autism by analyzing facial expressions and repetitive behaviors, but these attributes tend to be evaluated relative to how they present in a white autistic person assigned male at birth and identifying as masculine. Attributes related to neurodivergence vary considerably because racial and gender norms cause other forms of marginalization to affect how the same disabilities present, are perceived, and are masked. Therefore, people of color, transgender and gender nonconforming people, and girls and women are less likely to receive accurate diagnoses particularly for cognitive and mental health disabilities…” (citations omitted).

Accordingly, many respondents recommended expanding the existing biometrics framework to a broader set of technologies that collect and track any data derived from the body, including detection and characterization, because they similarly carry risk that could be mitigated by federal guidelines and regulation. Specifically, some of the policy proposals set forth in RFI comments included:

- Banning or severely limiting the government’s use of biometric technologies, including detection and characterization using an individual’s physical features;

- Prohibiting all collection of biometric data without express consent; and

- Requiring private entities collecting any form of biometric data to demonstrate that the system does not disparately impact marginalized communities through rigorous testing, auditing, and oversight.

4. Arguments Against Expanding Biometric Regulations to Equally Apply to Detection and Characterization Technologies

Many respondents from the technology and business communities argued that the OSTP’s broad scope of “biometrics,” which includes all forms of bodily measurement, is inconsistent with existing laws and scientific standards. Accenture, SIIA, and MITRE cited definitions set forth by the National Institute of Standards and Technology (NIST), National Center for Biotechnology Information (NCBI), Federal Bureau of Investigation, and Department of Homeland Security, as well as the U.S. state biometric and comprehensive privacy laws, which all limit “biometrics” to recognition or identification of an individual. As a result, most businesses have relied on this framework, and the guidance set forth by these entities, in developing their internal practices and procedures for processing such data.

Respondents also argued that systems used for identification and verification differ in their uses, processes, and risk profiles from those used for detection and characterization. Whereas identification, verification, and tracking technologies are directly tied to an individual’s identity or unique profile, and thus carry specific privacy concerns, detection and characterization technologies do not necessarily carry such risks when not employed against known individuals. Therefore, many respondents argued, implementing a horizontal standard for biometrics that conflates all technologies, such as a blanket ban, may cause unintended consequences, largely by hindering the progress of low-risk and valuable applications that society relies upon. Some examples presented of lower-risk use cases for bodily detection and characterization include:

- Face detection for a camera to provide auto-focus features;

- Skin characterization to diagnose skin conditions from images;

- Video analytics to determine the number of humans in a store to abide by COVID restrictions; and

- Assistive technology, such as speech transcription tools or auto-captioning.

With these technologies, industry experts emphasize that applications do not necessarily identify individuals, but process physical characteristics to deliver beneficial products or services. Though many companies acknowledged risks related to accuracy, bias, and discrimination against marginalized populations, they argued that such risks should be addressed outside the framework of “biometrics,” through a risk-based approach that distinguishes the types, uses, and levels of risk of different technologies. Because identifying technologies often pose a higher risk of harm, respondents noted that it is appropriate for them to incorporate more rigorous safeguards; however, those safeguards may not be equally necessary or valuable for other technologies.

Some examples of policy proposals provided by respondents that tailor the regulation to the specific use case or technology include:

- Requiring that biometric systems operate within certain allowable limits of demographic differentials for the specified use case(s);

- Requiring that automated decisions be adjudicated by a human for certain intended use case(s); and

- Establishing heightened requirements for law enforcement use of biometric information used for identification purposes.
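
The first proposal in this list, an allowable limit on demographic differentials, can be made concrete with a little arithmetic. The sketch below is illustrative only. The respondents did not specify a metric or a limit, so the choice of false non-match rate, the max-minus-min gap, the group labels, the sample outcomes, and the 0.05 limit are all assumptions.

```python
def false_non_match_rate(genuine_outcomes):
    """Share of genuine attempts (the right person) that were wrongly
    rejected. Each outcome is True if the system matched, else False."""
    rejected = sum(1 for matched in genuine_outcomes if not matched)
    return rejected / len(genuine_outcomes)


def within_allowable_differential(outcomes_by_group, limit):
    """Compare error rates across demographic groups.

    Returns (ok, rates, gap), where gap is the difference between the
    highest and lowest group error rate and ok is True if gap <= limit.
    """
    rates = {group: false_non_match_rate(outcomes)
             for group, outcomes in outcomes_by_group.items()}
    gap = max(rates.values()) - min(rates.values())
    return gap <= limit, rates, gap


# Hypothetical test results: 100 genuine attempts per group.
results = {
    "group_a": [True] * 98 + [False] * 2,   # 2 wrongful rejections
    "group_b": [True] * 90 + [False] * 10,  # 10 wrongful rejections
}

ok, rates, gap = within_allowable_differential(results, limit=0.05)
```

Under these made-up numbers the system rejects 2 percent of genuine attempts for one group and 10 percent for the other, a gap of about 8 percentage points, so it would fail a 5-point limit. A real rule would also need to specify which error rates count, how groups are defined, and how large the test sample must be.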

What’s Next?

At the end of the day, all technologies relying on data derived from our bodies carry some form of risk. Bodily characterization and detection technologies may not always carry privacy risks, but may nonetheless lead to invasive forms of profiling or discrimination that could be addressed through general AI regulations – such as requirements to conduct impact assessments or independent audits. Meanwhile, disagreements over definitions of “biometrics” may be overshadowing the key policy questions to be addressed, such as how to differentiate and mitigate current harms caused by unfair or inaccurate profiling.

Read the OSTP Summary of the Responses here.