The FPF Center for Artificial Intelligence: Navigating AI Policy, Regulation, and Governance

The rapid deployment of Artificial Intelligence for consumer, enterprise, and government uses has created challenges for policymakers, compliance experts, and regulators. AI policy stakeholders are seeking sophisticated, practical policy information and analysis.

This is where the FPF Center for Artificial Intelligence comes in, expanding FPF’s role as the leading pragmatic and trusted voice for those who seek impartial, practical analysis of the latest challenges for AI-related regulation, compliance, and ethical use.

At the FPF Center for Artificial Intelligence, we help policymakers, privacy experts at organizations, civil society, and academics navigate AI policy and governance. The Center is supported by a Leadership Council of experts from around the globe. The Council consists of members from industry, academia, civil society, and current and former policymakers.

FPF has a long history of AI-related and emerging technology policy work focused on data, privacy, and the responsible use of technology to mitigate harms. From FPF’s 2017 presentation to global privacy regulators about emerging AI technologies and risks to our 2023 briefing for members of the U.S. Congress detailing the risks of, and mitigation strategies for, AI-powered workplace technology, FPF has helped policymakers around the world better understand AI risks and opportunities while equipping data, privacy, and AI experts with the information they need to develop and deploy AI responsibly in their organizations.

In 2024, FPF received a grant from the National Science Foundation (NSF) to advance the White House Executive Order on Artificial Intelligence by supporting the use of Privacy Enhancing Technologies (PETs) by government agencies and the private sector, promoting legal certainty, standardization, and equitable uses of these technologies. FPF is also a member of the U.S. AI Safety Institute at the National Institute of Standards and Technology (NIST), where it focuses on assessing the policy implications of the changing nature of artificial intelligence.

Areas of work within the FPF Center for Artificial Intelligence include:

- Legislative Comparison

- Responsible AI Governance

- AI Policy by Sector

- AI Assessments & Analyses

- Novel AI Policy Issues

- AI and Privacy Enhancing Technologies

FPF Center for AI Leadership Council

The FPF Center for Artificial Intelligence is supported by a Leadership Council of leading experts from around the globe. The Council consists of members from industry, academia, civil society, and current and former policymakers.

We are delighted to announce the founding Leadership Council members:

- Estela Aranha, Member of the United Nations High-level Advisory Body on AI; Former State Secretary for Digital Rights, Ministry of Justice and Public Security, Federal Government of Brazil

- Jocelyn Aqua, Principal, Data, Risk, Privacy and AI Governance, PricewaterhouseCoopers LLP

- John Bailey, Nonresident Senior Fellow, American Enterprise Institute

- Lori Baker, Vice President, Data Protection & Regulatory Compliance, Dubai International Financial Centre Authority (DPA)

- Cari Benn, Assistant Chief Privacy Officer, Microsoft Corporation

- Andrew Bloom, Vice President & Chief Privacy Officer, McGraw Hill

- Kate Charlet, Head of Global Privacy, Safety, and Security Policy, Google

- Prof. Simon Chesterman, David Marshall Professor of Law & Vice Provost, National University of Singapore; Principal Researcher, Office of the UNSG’s Envoy on Technology, High-Level Advisory Body on AI

- Barbara Cosgrove, Vice President, Chief Privacy Officer, Workday

- Jo Ann Davaris, Vice President, Global Privacy, Booking Holdings Inc.

- Elizabeth Denham, Chief Policy Strategist, Information Accountability Foundation; Former UK ICO Commissioner and British Columbia Privacy Commissioner

- Lydia F. de la Torre, Senior Lecturer at University of California, Davis; Founder, Golden Data Law, PBC; Former California Privacy Protection Agency Board Member

- Leigh Feldman, SVP, Chief Privacy Officer, Visa Inc.

- Lindsey Finch, Executive Vice President, Global Privacy & Product Legal, Salesforce

- Harvey Jang, Vice President, Chief Privacy Officer, Cisco Systems, Inc.

- Lisa Kohn, Director of Public Policy, Amazon

- Emerald de Leeuw-Goggin, Global Head of AI Governance & Privacy, Logitech

- Caroline Louveaux, Chief Privacy Officer, MasterCard

- Ewa Luger, Professor of Human-Data Interaction, University of Edinburgh; Co-Director, Bridging Responsible AI Divides (BRAID)

- Dr. Gianclaudio Malgieri, Associate Professor of Law & Technology at eLaw, University of Leiden

- State Senator James Maroney, Connecticut

- Christina Montgomery, Chief Privacy & Trust Officer, AI Ethics Board Chair, IBM

- Carolyn Pfeiffer, Senior Director, Privacy, AI & Ethics and DSSPE Operations, Johnson & Johnson Innovative Medicine

- Ben Rossen, Associate General Counsel, AI Policy & Regulation, OpenAI

- Crystal Rugege, Managing Director, Centre for the Fourth Industrial Revolution Rwanda

- Guido Scorza, Member, The Italian Data Protection Authority

- Nubiaa Shabaka, Global Chief Privacy Officer and Chief Cyber Legal Officer, Adobe, Inc.

- Rob Sherman, Vice President and Deputy Chief Privacy Officer for Policy, Meta

- Dr. Anna Zeiter, Vice President & Chief Privacy Officer, Privacy, Data & AI Responsibility, eBay

- Yeong Zee Kin, Chief Executive, Singapore Academy of Law; Former Assistant Chief Executive (Data Innovation and Protection Group), Infocomm Media Development Authority of Singapore

For more information on the FPF Center for AI, email [email protected]

Featured

FPF Partner in algoaware Project Releases State of the Art Report

algoaware has released the first public version of the State of the Art Report, open for peer review. The report includes a comprehensive explanation of the key concepts of algorithmic decision-making, a summary of the academic debate and its most pressing issues, and an overview of the most recent and relevant initiatives and policy actions of civil society and of national and international governing bodies.

Calls for Regulation on Facial Recognition Technology

We look forward to working with Microsoft, others in industry, and policymakers to “create policies, processes, and tools” to make responsible use of facial recognition technology a reality.

Nothing to Hide: Tools for Talking (and Listening) About Data Privacy for Integrated Data Systems

Data-driven and evidence-based social policy innovation can help governments serve communities better, smarter, and faster. Integrated Data Systems (IDS) use data that government agencies routinely collect in the normal course of delivering public services to shape local policy and practice. They can use data to evaluate the effectiveness of new initiatives or bridge gaps between public services and community providers.

The Privacy Expert’s Guide to AI and Machine Learning

Today, FPF announces the release of The Privacy Expert’s Guide to AI and Machine Learning. This guide explains the technological basics of AI and ML systems at a level of understanding useful for non-programmers, and addresses certain privacy challenges associated with the implementation of new and existing ML-based products and services.

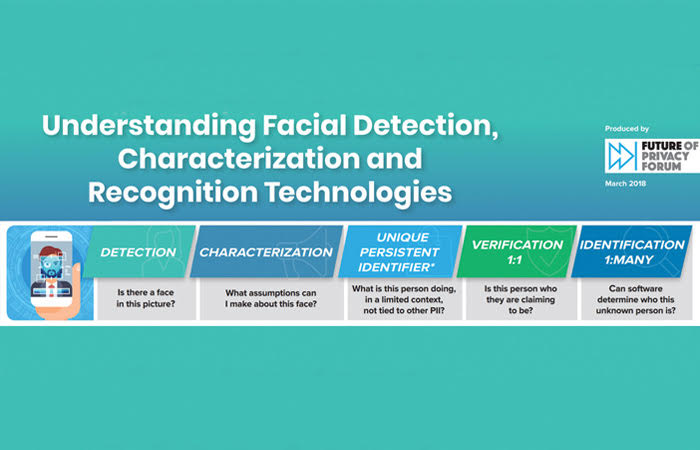

FPF Releases Understanding Facial Detection, Characterization, and Recognition Technologies and Privacy Principles for Facial Recognition Technology in Commercial Applications

These resources will help businesses and policymakers better understand and evaluate the growing use of face-based biometric technology systems when used for consumer applications. Facial recognition technology can help users organize and label photos, improve online services for visually impaired users, and help stores and stadiums better serve customers. At the same time, the technology often involves the collection and use of sensitive biometric data, requiring careful assessment of the data protection issues raised. Understanding the technology and building trust are necessary to maximize the benefits and minimize the risks.

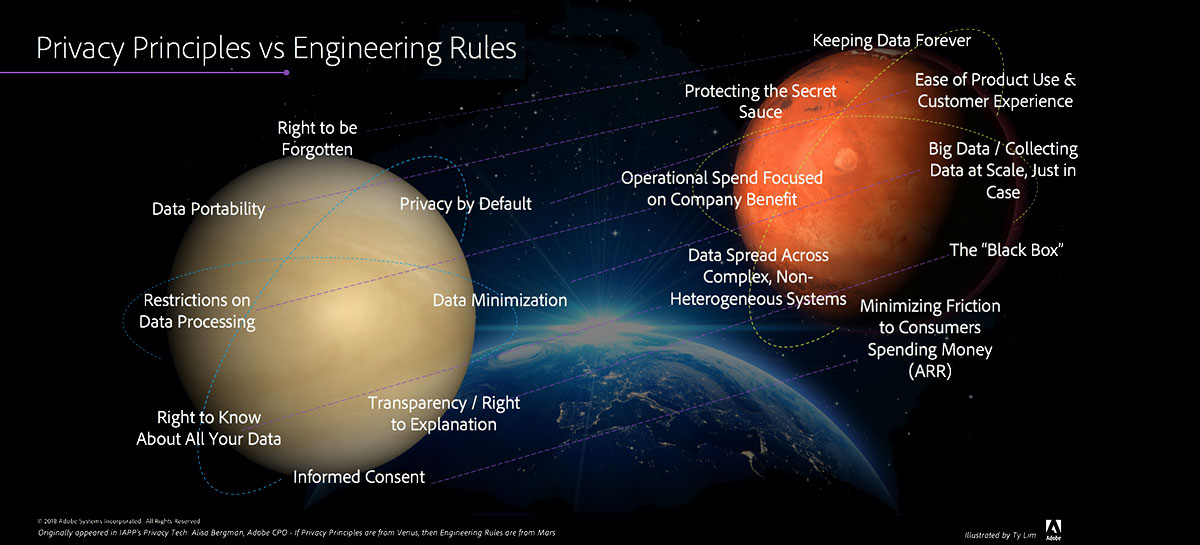

If privacy principles are from Venus, then engineering rules are from Mars

FPF Advisory Board member Alisa Bergman, Vice President and Chief Privacy Officer at Adobe Systems, recently wrote an article in the IAPP Tech Privacy Advisor that we think is very useful. The article grew out of a presentation Bergman gave to Adobe engineers.

FPF Launches AI and Machine Learning Working Group and Releases New AI Resource Guides

FPF has convened a leading group of its members to identify priority areas where technologies and companies can address ML privacy and ethics concerns. Our AI and Machine Learning Working Group, composed of FPF member companies with an interest in AI and machine learning privacy and data management challenges, meets monthly to discuss relevant developments and to hear from experts on topics such as AI in the EU and under the GDPR, how bias arises and how to defend against it, and other timely issues.

Policy Brief: European Commission’s Strategy for AI, explained

The European Commission published a Communication on “Artificial Intelligence for Europe” on April 24th 2018. It highlights the transformative nature of AI technology for the world and calls for the EU to lead the way in developing AI grounded in a fundamental rights framework. “AI for good and for all” is the motto the Commission proposes. The Communication could be summed up as announcing concrete funding for research projects, clear social goals, and more thinking about everything else.

FPF Publishes Report Supporting Stakeholder Engagement and Communications for Researchers and Practitioners Working to Advance Administrative Data Research

The ADRF Network is an evolving grassroots effort among researchers and organizations seeking to collaborate on improving access to, and promoting the ethical use of, administrative data in social science research. As a supporter of evidence-based policymaking and research, FPF has been an integral part of the Network since its launch and has chaired the Network’s Data Privacy and Security Working Group since November 2017.

Beyond Explainability: A Practical Guide to Managing Risk in Machine Learning Models

Beyond Explainability aims to provide a template for effectively managing the risks of machine learning models in practice, with the goal of giving lawyers, compliance personnel, data scientists, and engineers a framework to safely create, deploy, and maintain ML models, and of enabling effective communication between these distinct organizational perspectives.