
Annual DC Privacy Forum: Convening Top Voices in Governance in the Digital Age

[…] up the crowd for “The Big Debates.” This event’s debate-style format allowed the audience to participate via real-time voting before, during, and after the debaters’ presentations. Debate 1: “Current U.S. Law Provides Effective Regulation for AI” Will Rinehart, Senior Fellow at the American Enterprise Institute, argued in favor of the statement, stating that existing […]

10. Navigating the Evolving Ad Tech Landscape Brief Sheet

[…] data minimization paradigm embodied in MODPA, MHMD, and the NYCPA, the collection of data itself is prohibited unless it is deemed necessary for providing a product or service requested by the consumer. In this regard it is closely related to purpose specification. In a substantive model, collection and use is tied to the nature […]

2. State & Federal Privacy Leg & Reg Brief Sheet

[…] Country ● Health: A View from DC: New York Readies to Pass an Extraordinary Health Privacy Law ● Location: FPF Mobility & Location March 2025 Working Group Call Notes ● FPF Report: Anatomy of State Comprehensive Privacy Law State & Federal Privacy Legislation and Regulation Discussion Lead: Jordan Francis and Keir Lamont CONTINUED: 6. […]

Brazil’s ANPD Preliminary Study on Generative AI highlights the dual nature of data protection law: balancing rights with technological innovation

[…] hospital protocols and support decision-making in the healthcare sector. Finally, Banco do Brasil is developing a Large Language Model (LLM) to assist employees in providing better customer service experiences. The study also highlights the increasing popularity of commercially available generative AI systems such as OpenAI’s ChatGPT and Google’s Gemini among Brazilian users. In this […]

Framework for the Future- Reviewing Data Privacy in Today’s Financial System

[…] Act), and children (Children’s Online Privacy Protection Act). Other privacy protection laws relate to topics like marketing, such as the CAN-SPAM Act and do-not-call requirements. U.S. laws can give federal agencies power to issue supporting regulations, such as the Federal Trade Commission for CAN-SPAM or the Consumer Financial Protection […]

FPF Unveils Paper on State Data Minimization Trends

[…] which personal data can be collected, used, or shared, typically requiring some connection between the personal data and the provision or maintenance of a requested product or service. This white paper explores this ongoing trend towards substantive data minimization, with a focus on the unresolved questions and policy implications of this new language. Read […]

Vermont and Nebraska: Diverging Experiments in State Age-Appropriate Design Codes

[…] Vermont’s default settings approach to safer design, Nebraska requires covered businesses to develop various tools for minors. In some instances, these tools overlap with the default settings called for in Vermont and are just a different statutory approach of arriving at the same goal, such as tools for restricting the collection of geolocation data […]

2025 FPF Working Groups

[…] details on how to access your new account. If you have any questions or issues accessing your Portal profile, please email [email protected] . Ad Practices – Monthly call on the first Thursday of the month at 12pm ET ● 600+ privacy leaders interested in learning about new and existing ad technologies. ● Shares news, […]

FPF-AnnualReport2023-1

[…] of the Union and the public continued to advocate for more safety and privacy protections on social media, 2023 saw federal and state policymakers react to the call. In response, FPF’s Youth & Education Privacy team provided comments to the National Telecommunications and Information Administration on Kids Online Health and Safety, as well as […]

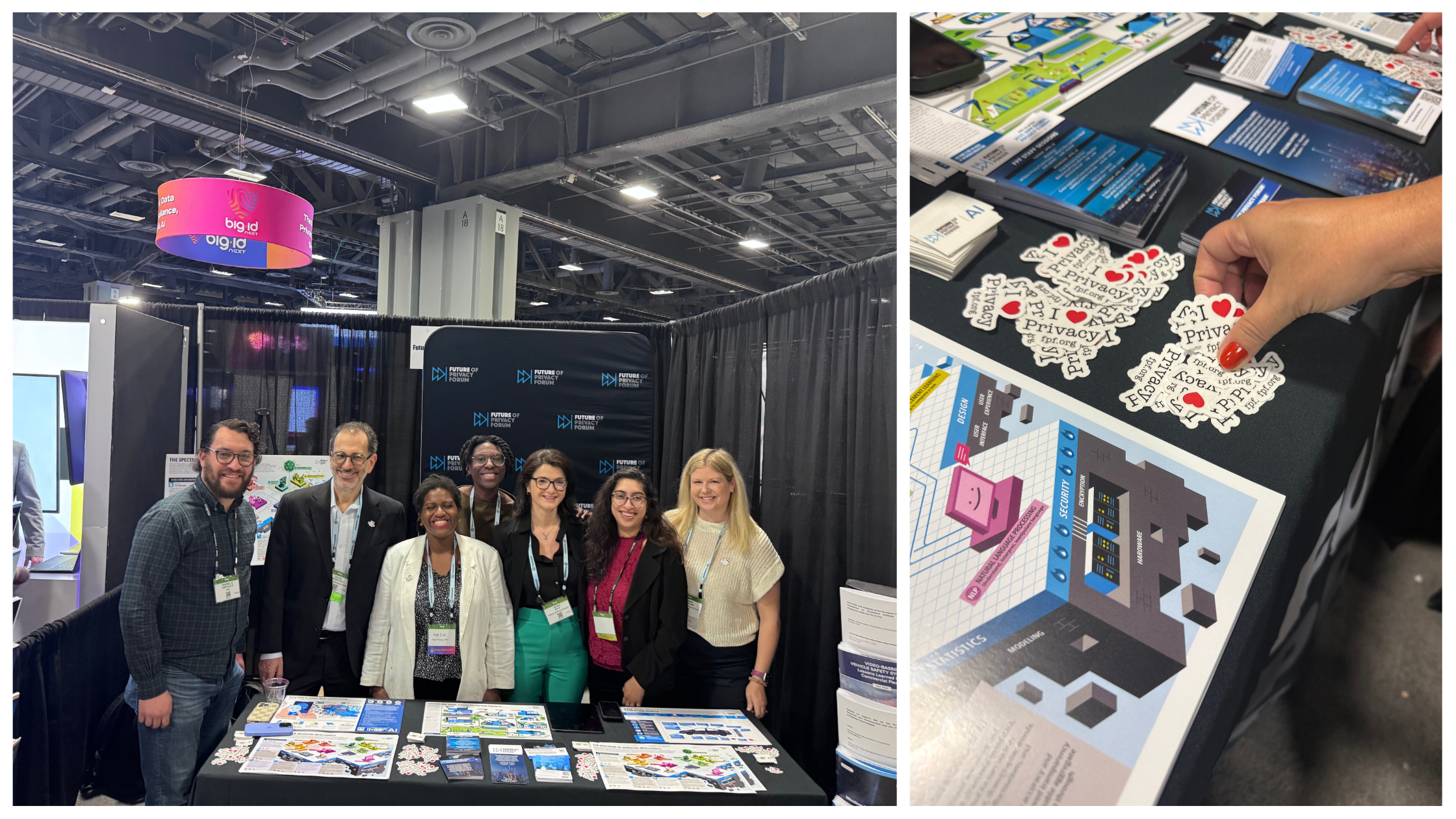

FPF Experts Take The Stage at the 2025 IAPP Global Privacy Summit

[…] FPF.org for all our reports, publications, and infographics. Follow us on X, LinkedIn, Instagram, and YouTube, and subscribe to our newsletter for the latest.