FPF Highlights Intersection of AI, Privacy, and Civil Rights in Response to California’s Proposed Employment Regulations

On July 18, the Future of Privacy Forum submitted comments to the California Civil Rights Council (Council) in response to its proposed modifications to regulations under the state Fair Employment and Housing Act (FEHA) regarding automated-decision systems (ADS). As one of the first state agencies in the U.S. to advance modernized employment regulations to account for automated-decision […]

FPF Responds to the Federal Election Commission's Decision on the Use of AI in Political Campaign Advertising

The Federal Election Commission’s (FEC) abandoned rulemaking presented an opportunity to better protect the integrity of elections and campaigns, as well as to preserve and increase public trust in the growing use of AI by candidates and in campaigns. When generative AI is used carefully and responsibly, it can reach different segments of the population […]

Connecting Experts to Make Privacy-Enhancing Tech and AI Work for Everyone

The Future of Privacy Forum (FPF) launched its Research Coordination Network (RCN) for Privacy-Preserving Data Sharing and Analytics on Tuesday, July 9th. Industry experts, policymakers, civil society, and academics met to discuss the possibilities afforded by Privacy Enhancing Technologies (PETs), the inherent regulatory challenges, and how PETs interact with rapidly developing AI systems. FPF experts […]

NEW FPF REPORT: Confidential Computing and Privacy: Policy Implications of Trusted Execution Environments

Written by Judy Wang, FPF Communications Intern

Today, the Future of Privacy Forum (FPF) published a paper on confidential computing, a privacy-enhancing technology (PET) that marks a significant shift in the trustworthiness and verifiability of data processing for the use cases it supports, including training and use of AI models. Confidential computing leverages two key […]

A First for AI: A Close Look at the Colorado AI Act

Colorado made history on May 17, 2024, when Governor Polis signed into law the Colorado Artificial Intelligence Act (“CAIA”), the first law in the United States to comprehensively regulate the development and deployment of high-risk artificial intelligence (“AI”) systems. The law will come into effect on February 1, 2026, preceding the March 2026 effective date […]

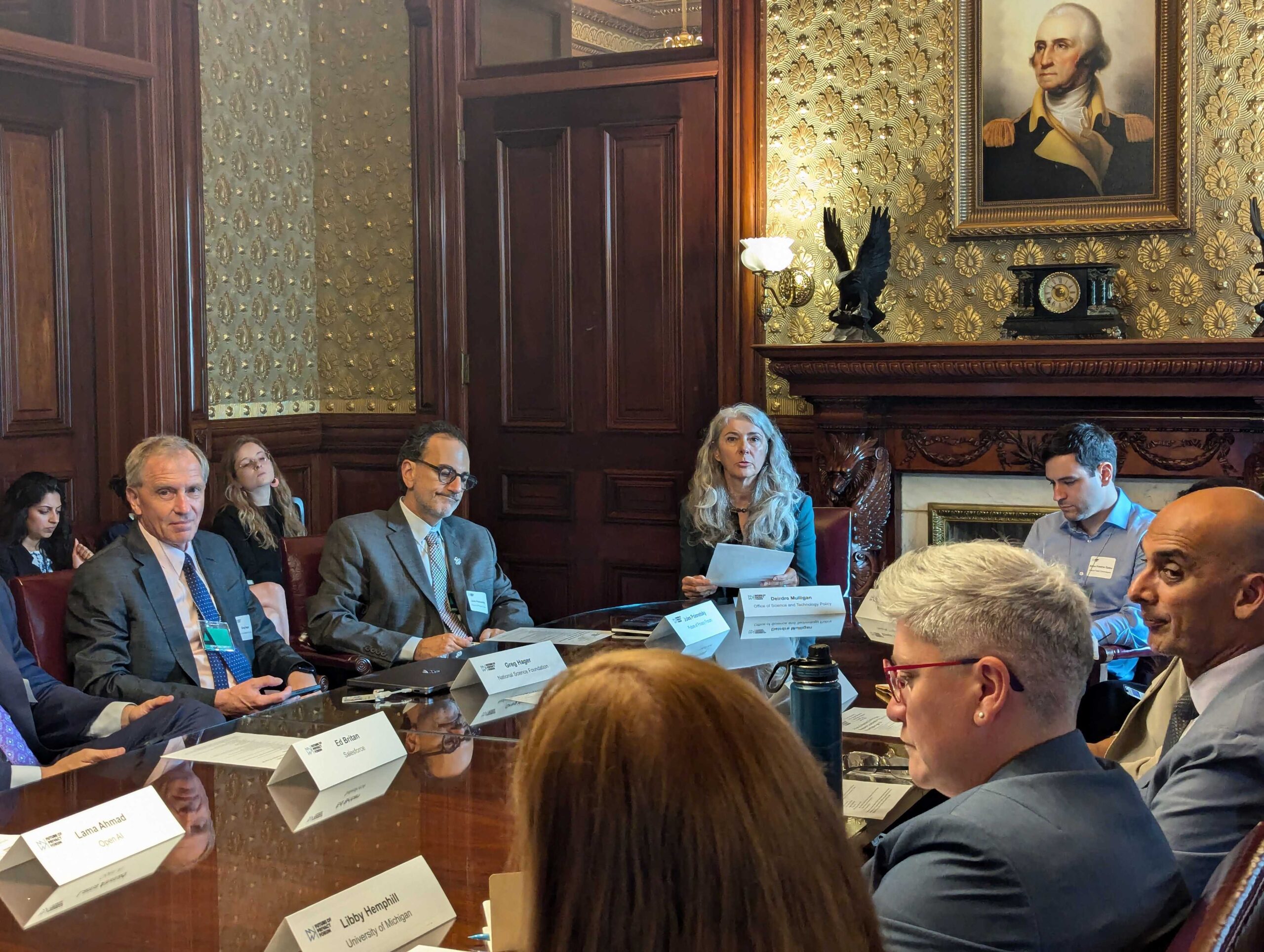

FPF Launches Effort to Advance Privacy-Enhancing Technologies, Convenes Experts, and Meets With White House

FPF’s Research Coordination Network will support developing and deploying Privacy-Enhancing Technologies (PETs) for socially beneficial data sharing and analytics.

JULY 9, 2024 – Today, the Future of Privacy Forum (FPF) is launching the Privacy-Enhancing Technologies (PETs) Research Coordination Network (RCN) with a virtual convening of diverse experts alongside a high-level, in-person workshop with key stakeholders […]

Chevron Decision Will Impact Privacy and AI Regulations

The Supreme Court has issued a 6-3 decision in two long-awaited cases – Loper Bright Enterprises v. Raimondo and Relentless, Inc. v. Department of Commerce – overturning the legal doctrine of “Chevron deference.” While the decision will impact a wide range of federal rules, it is particularly salient for ongoing privacy, data protection, and artificial […]

AI Forward: FPF’s Annual DC Privacy Forum Explores Intersection of Privacy and AI

The Future of Privacy Forum (FPF) hosted its inaugural DC Privacy Forum: AI Forward on Wednesday, June 5th. Industry experts, policymakers, civil society, and academics explored the intersection of data, privacy, and AI. At the InterContinental on Washington, DC’s Southwest Waterfront, participants joined in person for a full-day program consisting of keynote panels, AI talks, […]

Future of Privacy Forum Launches the FPF Center for Artificial Intelligence

The FPF Center for Artificial Intelligence will serve as a catalyst for AI policy and compliance leadership globally, advancing responsible data and AI practices for public and private stakeholders.

Today, the Future of Privacy Forum (FPF) launched the FPF Center for Artificial Intelligence, established to better serve policymakers, companies, non-profit organizations, civil society, and academics […]

Colorado Enacts First Comprehensive U.S. Law Governing Artificial Intelligence Systems

On May 17, Governor Polis signed the Colorado AI Act (CAIA) (SB-205) into law, establishing new individual rights and protections with respect to high-risk artificial intelligence systems. Building on existing best practices and prior legislative efforts, the CAIA is the first comprehensive United States law to explicitly establish guardrails against discriminatory outcomes […]