[…] On September 10, 2024, the FCC published a Notice of Inquiry (“NOI”) on technologies that can alert consumers that they may be interacting with an AI-generated call. Specifically, in the NOI, the FCC seeks comment on tools for detecting, alerting, and blocking AI-generated calls based on real-time phone call content analysis […]

Out, Not Outed: Privacy for Sexual Health, Orientations, and Gender Identities

[…] state-sponsored insurance for gender-affirming treatments, regardless of the patient’s age. New Legal Protections for Health Data When collected inside a health care environment, such as a telehealth service, health data, including SOGI information, is subject to protections under the Health Insurance Portability and Accountability Act (HIPAA) and related laws or regulations. However, these protections […]

Ed_AI_legal_compliance.pdf_(FInal_OCT24)

[…] generation of AI tools related to questions about academic honesty and plagiarism, though by the start of the 2023 school year, K–12 organizations seemed more willing to adopt them while calling for the development of policies and frameworks to safely use them. Issues to be addressed included protecting copyright, addressing the inaccurate “hallucinations” the tools frequently produce, and also complying w […]

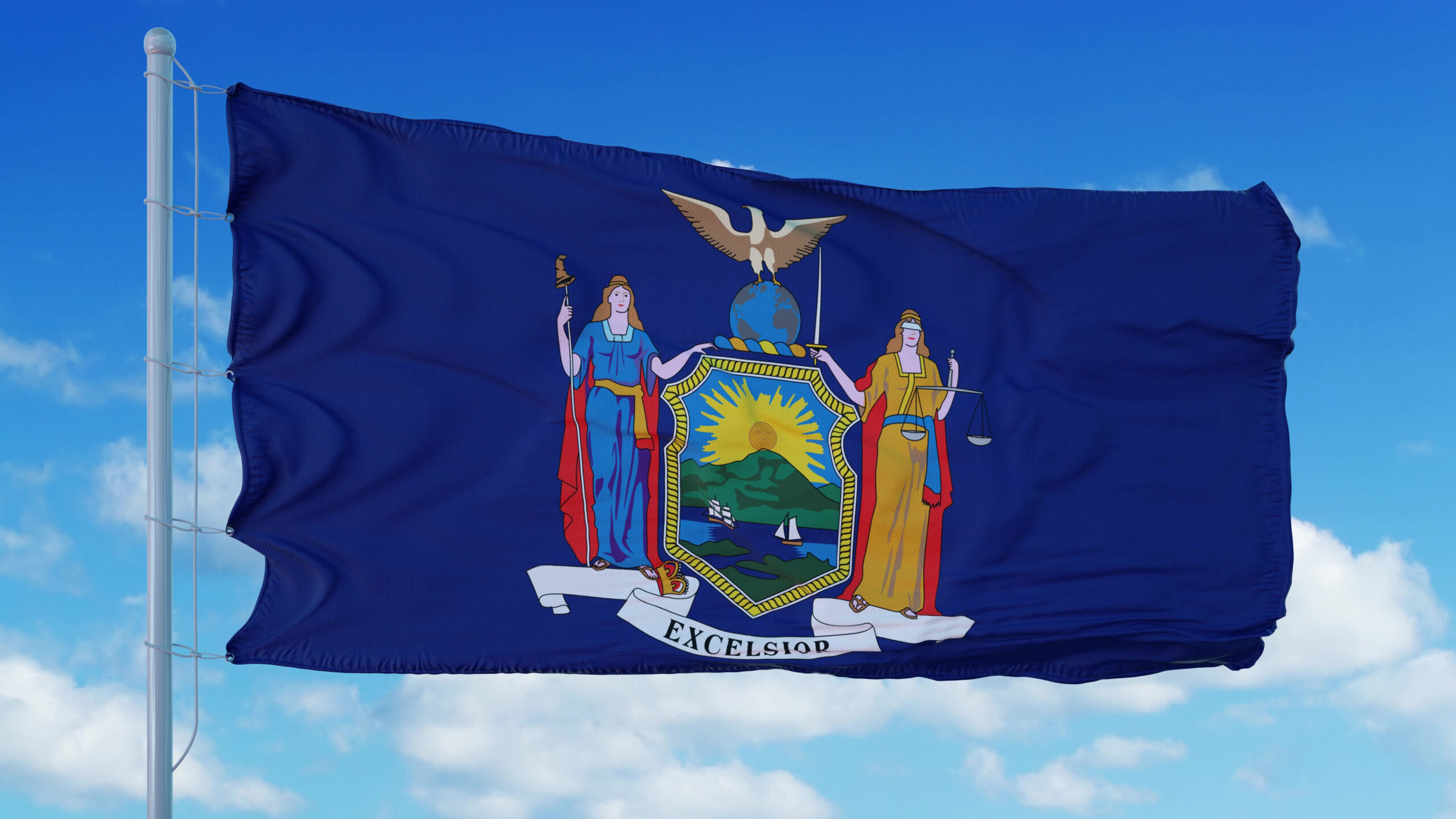

FPF Submits Comments to Inform New York Children’s Privacy Rulemaking Processes

[…] and conspicuously state that the processing for which consent is requested is not strictly necessary and that the minor may decline without preventing continued use of the service; and clearly present an option to refuse to provide consent as the most prominent option. The NYCDPA is also unique in providing for the use of […]

FPF-Sponsorship Prospectus-24-25-R7

[…] benefits for the above Coffee Breaks & Networking Lunch Sponsorship included ❱ Name, logo, and website link on event page, located on FPF website, with a special call-out as the exclusive sponsor FPF DC PRIVACY FORUM FPF SPONSORSHIP PROSPECTUS | 4 Please contact Alyssa Rosinski at [email protected] for more information. FPF ANNUAL […]

FPF Sponsorship Prospectus 2024 -2025 DC Privacy Forum

[…] located on FPF website ❱ Official recognition of sponsor during Opening Remarks EXCLUSIVE FORUM SPONSOR • $12,000 ❱ All benefits for the above Coffee Breaks & Networking Lunch Sponsorship included ❱ Name, logo, and website link on event page, located on FPF website, with a special call-out as the exclusive sponsor FPF DC PRIVACY FORUM

Data Clean Rooms: A Taxonomy & Technical Primer Discussion Draft

FPF Data Clean Rooms Discussion Sept 2024

[…] the product. All personnel and equipment entering a silicon fabrication facility must ensure they are not contaminating the facility. To that end, personnel wear cleanroom suits, also called “bunny suits”, which ensure no outside contaminant affects the delicate manufacturing inside the facility. Thus, “clean room” originally referred to a place where everything was clean, […]