FPF Perspective: Limit Law Enforcement Access to Genetic Datasets

Today, researchers published a paper detailing how governments can use public genetic databases to identify criminal suspects. These activities raise real questions about when it’s appropriate for law enforcement to analyze genetic information, and how best to protect individuals whose genetic data has been analyzed as part of a commercial service, but who are not accused of a crime.

Mobile Platforms Address Data Privacy with 2018 Updates (iOS 12, Mojave, & Android P)

[…] same through any number of factory resets. Following changes made last year in Android O, app developers can no longer access the device serial number without using a new API (Build.getSerial()), which will provide the serial number only if the developer has obtained the user’s permission to read the phone state. Importantly, we have seen both […]

Immuta and the Future of Privacy Forum Release First-Ever Risk Management Framework for AI and Machine Learning

College Park, MD – June 26, 2018 – Immuta and the Future of Privacy Forum (FPF) today announced the first-ever framework for practitioners to manage risk in artificial intelligence (AI) and machine learning (ML) models. Their joint whitepaper, Beyond Explainability: A Practical Guide to Managing Risk in Machine Learning Models, provides business executives, data scientists, and compliance professionals with a strategic guide for governing the legal, privacy, and ethical risks associated with this technology.

The Top 10 (& Federal Actions): Student Privacy News (November 2017-February 2018)

[…] and confidentiality of student records” (page 49). The budget also proposes to eliminate federal funding for Statewide Longitudinal Data Systems and Regional Educational Laboratories (page 51). The USED budget request documentation also notes that “One of the significant challenges that ED will work to address is that the large number of ED applications & IT systems currently externally […]

Seeing the Big Picture on Smart TVs and Smart Home Tech

CES 2018 brought to light many exciting advancements in consumer technologies. Without a doubt, Smart TVs, Smart Homes, and voice assistants were dominant: LG has a TV that rolls up like a poster; Philips introduced a Google Assistant-enabled TV designed for the kitchen; and Samsung revealed its new line of refrigerators, TVs, and other home devices powered by Bixby, its intelligent voice assistant.

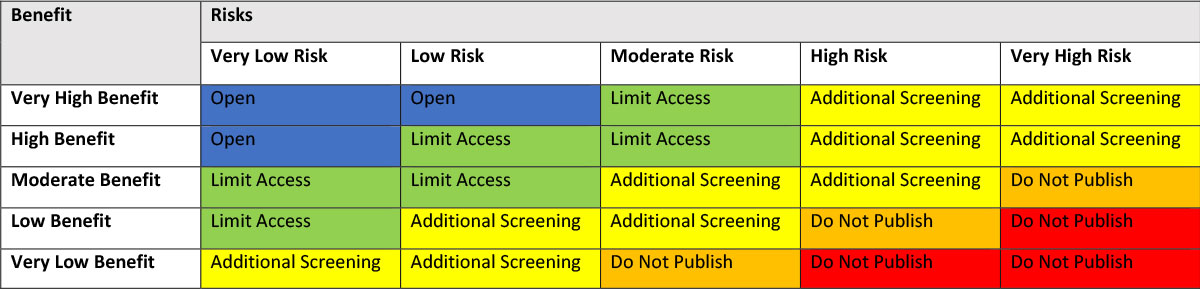

Examining the Open Data Movement

The transparency goals of the open data movement serve important social, economic, and democratic functions in cities like Seattle. At the same time, some municipal datasets about the city and its citizens’ activities carry inherent risks to individual privacy when shared publicly. In 2016, the City of Seattle declared in its Open Data Policy that the city’s data would be “open by preference,” except when doing so may affect individual privacy.[1] To ensure its Open Data Program effectively protects individuals, Seattle committed to performing an annual risk assessment and tasked the Future of Privacy Forum (FPF) with creating and deploying an initial privacy risk assessment methodology for open data.

New US Dept of Ed Finding: Schools Cannot Require Parents or Students to Waive Their FERPA Rights Through Ed Tech Company’s Terms of Service

Policymakers, parents, and privacy advocates have long asked whether FERPA is up to the task of protecting student privacy in the 21st century. A just-released letter regarding the Agora Cyber Charter School might signal that a FERPA compliance crackdown has begun. U.S. Department of Education (USED) employees frequently mentioned such a crackdown at conferences throughout 2017 as their next step after providing extensive guidance. The Agora letter provides crucial guidance to schools and ed tech companies about how USED interprets FERPA’s requirements regarding parental consent and ed tech products’ terms of service, and it may predict USED’s enforcement priorities going forward.

Privacy Scholarship Research Reporter: Issue 3, December 2017 – 2017 Privacy Papers for Policymakers Award Winners

Notes from FPF: On December 12, 2017, FPF announced the winners of our 8th Annual Privacy Papers for Policymakers (PPPM) Award. This Award recognizes leading privacy scholarship that is relevant to policymakers in the United States Congress, at U.S. federal agencies, and for data protection authorities abroad. In this special issue of the Scholarship Reporter, you […]

Unfairness By Algorithm: Distilling the Harms of Automated Decision-Making

Analysis of personal data can be used to improve services, advance research, and combat discrimination. However, such analysis can also create valid concerns about differential treatment of individuals or harmful impacts on vulnerable communities. These concerns can be amplified when automated decision-making uses sensitive data (such as race, gender, or familial status), impacts protected classes, or affects individuals’ eligibility for housing, employment, or other core services. When seeking to identify harms, it is important to appreciate the context of interactions between individuals, companies, and governments—including the benefits provided by automated decision-making frameworks, and the fallibility of human decision-making.

NAI Combines Web, Mobile, and Cross-Device Tracking Rules for 2018

The Network Advertising Initiative (NAI) released its 2018 Code of Conduct yesterday, consolidating the rules for online and mobile behavioral advertising (interest-based advertising). NAI, a non-profit organization in Washington, DC, is the leading self-regulatory association for digital advertising, with over 100 members and a formalized internal review mechanism.